Your files will not be used to train our models. This straightforward statement is the cornerstone of our commitment to user privacy. We’re diving deep into the nuances of this policy, exploring its implications, security measures, and how it shapes user trust and experience. We’ll dissect various interpretations, potential issues, and the ethical considerations behind this commitment to data protection.

Transparency and user-centric design are key throughout this discussion.

The statement “Your files will not be used to train our models” is a significant assurance, directly addressing a major concern in the digital age. This policy goes beyond a simple declaration; it represents a commitment to safeguarding user data and fostering trust. This comprehensive look will explore how this policy is put into practice, including the security measures employed, the legal and ethical considerations, and the impact on user experience.

It also looks at potential alternative interpretations and the processes for clarifying any ambiguities.

Understanding the Statement: Your Files Will Not Be Used To Train Our Models

The phrase “your files will not be used to train our models” addresses a central data privacy question: whether user-provided data is used to train machine learning algorithms, a common practice for many services. Understanding this statement requires examining its implications, its possible interpretations, and its benefits and drawbacks. The statement signifies a commitment not to use user data to improve the performance of the company’s algorithms.

This departs from a practice common across the industry, in which user data is used, implicitly or explicitly, for model training. It is important to distinguish between using data for immediate service delivery and using it for algorithm improvement.

Detailed Explanation of the Statement

The statement “your files will not be used to train our models” explicitly clarifies that the user’s data is not part of the training dataset for the company’s machine learning models. This means the models are not “learning” from the specific data provided by individual users. This is distinct from situations where data might be used for immediate service provision but not for model training.
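In a data pipeline, one way to enforce this is to tag every record with its origin and admit only explicitly approved sources when a training set is assembled. The sketch below is hypothetical: the `Record` type, its `source` field, and the source names are illustrative assumptions, not a description of any actual pipeline.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Hypothetical allowlist of sources approved for training. User uploads are
# deliberately absent, so they can never reach a training set.
APPROVED_TRAINING_SOURCES = frozenset({"licensed_dataset", "public_domain"})


@dataclass(frozen=True)
class Record:
    record_id: str
    source: str  # hypothetical tag recording where the data came from
    text: str


def build_training_set(records: Iterable[Record]) -> List[Record]:
    """Keep only records whose source is explicitly approved for training."""
    return [r for r in records if r.source in APPROVED_TRAINING_SOURCES]


if __name__ == "__main__":
    corpus = [
        Record("a1", "licensed_dataset", "licensed text"),
        Record("u7", "user_upload", "a user's private notes"),
        Record("p3", "public_domain", "public text"),
    ]
    print([r.record_id for r in build_training_set(corpus)])  # ['a1', 'p3']
```

An allowlist is used rather than a denylist, so a record with a missing or unexpected source tag is excluded by default instead of slipping through.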

Implications for User Privacy

If it is honored in practice, this commitment significantly strengthens user privacy. By explicitly stating that user data is not used for training, the company demonstrates a commitment to safeguarding user information. This transparency fosters trust and allows users to make informed decisions about sharing their data.

Different Interpretations by Various Users

Different users will interpret this statement in varying ways. Some may interpret it as a complete guarantee of privacy, while others might be concerned about potential loopholes or exceptions. For instance, a user might wonder if data is still used for internal analysis or to improve related services, even if not used directly in model training.

Potential Benefits and Drawbacks of Such a Policy

A policy of not using user data for model training can foster trust and alleviate privacy concerns. However, it may also limit opportunities for improvement and innovation in the service. Because its models must then rely on other data sources, the company may find them harder to develop and maintain.

Importance of Transparency in Data Handling Policies

Transparency is paramount in data handling policies. Clear and unambiguous statements about data usage are crucial for building user trust and maintaining ethical practices. The absence of transparency can lead to misunderstandings and erode trust. Users should be informed about how their data is collected, used, and protected.

Interpretations of the Statement

| Interpretation | User Impact | Privacy Implications |
|---|---|---|
| Data is not used for model training, but might be used for other purposes. | Users might feel slightly less secure than with a complete guarantee. | Privacy is maintained regarding model training but potential use for other services needs further clarification. |
| Data is not used to train specific models, but might be used for broader analytics. | Users need to be informed about the broader analytics use. | Privacy is maintained regarding the specific models but not about general analytics. |
| Data is completely isolated and not used in any way to improve models. | Users feel most secure. | Strongest privacy guarantee. |

Contrasting with Other Data Usage Policies

| Policy | Example wording | Used for model training? |
|---|---|---|
| 1 | “Your files may be used to train our models, but we will anonymize your data.” | Possibly, after anonymization |
| 2 | “Your files will be used for model training, but only for improving the service in a general way.” | Yes |
| 3 | “Your files will not be used to train our models, and will be stored securely.” | No |

Security and Data Handling

Protecting user data is paramount in any system that handles sensitive information. This section outlines the security measures implemented to safeguard user files and ensure adherence to data handling policies. Robust security protocols are in place to prevent unauthorized access and maintain data integrity.

Security Measures for Data Protection

Comprehensive security measures are designed to safeguard user files from unauthorized access, alteration, and deletion. These measures apply at every stage, from data entry to final deletion. A multi-layered approach ensures that no single failed safeguard leaves data exposed, providing continuous protection.

Mechanisms for Preventing Unauthorized Access

Multiple layers of security mechanisms are in place to prevent unauthorized access to user files. These include strong authentication protocols, access control lists, and regular security audits. These measures are designed to limit access to only authorized personnel and to promptly identify and remediate any vulnerabilities.
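A minimal sketch of an access control list check with default deny: a caller may perform an action on a file only if one of its roles was explicitly granted that action. The `FileACL` class, role names, and file IDs are hypothetical illustrations, not part of any specific product.

```python
from collections import defaultdict
from typing import DefaultDict, Iterable, Set


class FileACL:
    """Per-file access control list with default deny (hypothetical sketch)."""

    def __init__(self) -> None:
        # file_id -> role -> actions that role may perform on the file
        self._entries: DefaultDict[str, DefaultDict[str, Set[str]]] = defaultdict(
            lambda: defaultdict(set)
        )

    def grant(self, file_id: str, role: str, action: str) -> None:
        self._entries[file_id][role].add(action)

    def revoke(self, file_id: str, role: str, action: str) -> None:
        if file_id in self._entries:
            self._entries[file_id][role].discard(action)

    def is_allowed(self, file_id: str, roles: Iterable[str], action: str) -> bool:
        # .get() avoids creating empty entries for unknown files or roles.
        file_entries = self._entries.get(file_id, {})
        return any(action in file_entries.get(role, set()) for role in roles)


if __name__ == "__main__":
    acl = FileACL()
    acl.grant("file-42", "owner", "read")
    print(acl.is_allowed("file-42", {"owner"}, "read"))    # True
    print(acl.is_allowed("file-42", {"support"}, "read"))  # False
```

Denying by default means that forgetting to add an entry fails safe: the file stays inaccessible rather than exposed.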

Data Deletion and Anonymization Processes

Data deletion and anonymization processes are crucial for complying with regulations and maintaining user privacy. A well-defined procedure ensures that data is securely removed or anonymized when it is no longer needed. This involves deleting data from storage devices, keeping data encrypted so that destroying its keys leaves any remaining copies unreadable, and ensuring that no trace of the original data remains. Regular audits confirm that each deletion is complete and irreversible.
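The removal step and the follow-up audit can be sketched as a retention sweep over a simple in-memory store. The `StoredFile` type, the store layout, and the 30-day retention period in the example are hypothetical; a real deployment would also have to account for backups and replicas, which this sketch does not model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List


@dataclass
class StoredFile:
    file_id: str
    uploaded_at: datetime  # timezone-aware upload time


def sweep_expired(store: Dict[str, StoredFile], retention: timedelta,
                  now: datetime) -> List[str]:
    """Delete files held longer than `retention` and return their IDs."""
    # Collect first, then delete, so the dict is not mutated while iterating.
    expired = [fid for fid, f in store.items() if now - f.uploaded_at > retention]
    for fid in expired:
        del store[fid]
    return expired


def audit_deletion(store: Dict[str, StoredFile], deleted_ids: List[str]) -> bool:
    """Audit step: confirm that none of the deleted IDs is still present."""
    return all(fid not in store for fid in deleted_ids)


if __name__ == "__main__":
    now = datetime(2024, 6, 1, tzinfo=timezone.utc)
    store = {
        "old": StoredFile("old", datetime(2024, 1, 1, tzinfo=timezone.utc)),
        "new": StoredFile("new", datetime(2024, 5, 25, tzinfo=timezone.utc)),
    }
    deleted = sweep_expired(store, retention=timedelta(days=30), now=now)
    print(deleted, audit_deletion(store, deleted))  # ['old'] True
```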

Examples of Data Protection and Security

Strong encryption algorithms are used to protect sensitive data in transit and at rest. For example, data is encrypted using industry-standard algorithms like AES-256 before being stored. Access to sensitive areas of the system is limited to authorized personnel, requiring multiple authentication factors.
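The paragraph mentions multiple authentication factors. One widely used second factor is a time-based one-time password (TOTP, RFC 6238), which builds on HOTP (RFC 4226). The sketch below uses only Python’s standard library and illustrates the algorithm; it is an assumption for illustration, not a statement of which factor this service uses, and a production system would also need secure secret storage and rate limiting.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30,
         digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over fixed time steps."""
    t = time.time() if at is None else at
    return hotp(secret, int(t // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes from the current step or up to `window` steps either side."""
    t = time.time() if at is None else at
    counter = int(t // step)
    for drift in range(-window, window + 1):
        candidate = counter + drift
        if candidate < 0:
            continue
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(hotp(secret, candidate, digits), submitted):
            return True
    return False


if __name__ == "__main__":
    # RFC 6238 test vector: ASCII secret "12345678901234567890", T = 59 s, 8 digits.
    print(totp(b"12345678901234567890", at=59, digits=8))  # 94287082
```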

Security Protocols Mentioned in the Policy

- Multi-factor authentication (MFA): Ensuring only authorized users gain access.

- Regular security audits: Identifying and addressing potential vulnerabilities.

- Data encryption: Protecting data in transit and at rest using strong encryption algorithms.

- Access control lists (ACLs): Restricting access to sensitive data based on user roles and permissions.

- Data anonymization protocols: Transforming data to remove identifying information while preserving the data’s usefulness for analysis.
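As a minimal sketch of the data anonymization protocols listed above, a record can have its direct identifiers dropped and its user ID replaced with a keyed hash (HMAC-SHA256). Strictly speaking this is pseudonymization rather than anonymization: whoever holds the key can re-link records, and regulations such as GDPR still treat pseudonymized data as personal data. The field names and key are hypothetical.

```python
import hashlib
import hmac
from typing import Any, Dict

# Hypothetical direct identifiers that are dropped outright.
DIRECT_IDENTIFIERS = frozenset({"name", "email", "phone"})


def pseudonymize(record: Dict[str, Any], key: bytes) -> Dict[str, Any]:
    """Drop direct identifiers and replace `user_id` with an HMAC-SHA256 reference."""
    out = {
        k: v for k, v in record.items()
        if k not in DIRECT_IDENTIFIERS and k != "user_id"
    }
    if "user_id" in record:
        mac = hmac.new(key, str(record["user_id"]).encode("utf-8"), hashlib.sha256)
        out["user_ref"] = mac.hexdigest()  # same ID and key always give the same reference
    return out


if __name__ == "__main__":
    row = {"user_id": 17, "email": "a@example.com", "country": "DE", "plan": "pro"}
    print(pseudonymize(row, key=b"hypothetical-secret-key"))
```

A keyed hash is used instead of a plain SHA-256 of the ID because user IDs are often guessable; without the key, an attacker cannot simply hash candidate IDs and compare the results.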

Importance of Data Encryption

Data encryption is critical for safeguarding user files. It renders data unreadable to unauthorized individuals, even if they gain access to the storage media. Encryption does not prevent breaches, but it limits their impact: stolen ciphertext stays confidential as long as the keys are not also compromised. A robust encryption strategy is essential to protect sensitive user data, such as financial information or personal details.

Possible Scenarios Where the Policy Might Not Be Sufficient

While the policy incorporates numerous security measures, potential vulnerabilities can arise from emerging threats or unforeseen circumstances. Sophisticated cyberattacks, for example, may exploit vulnerabilities not currently anticipated. The policy might also be insufficient against attacks that exploit vulnerabilities in third-party software or hardware components used in the system. Furthermore, human factors, such as compromised employee accounts, remain a possible threat vector.

Regular review and updates to the policy are essential to ensure ongoing effectiveness in addressing new threats.

User Experience and Trust

A clear and transparent data policy is crucial for building user trust and fostering a positive user experience. Users are increasingly aware of how their data is collected, used, and protected, and they expect organizations to be forthright and accountable. A well-articulated policy can significantly influence how users perceive and engage with a service. This section explores the impact of a data policy on user trust, engagement, and choices.

It also details strategies for enhancing transparency and fostering a positive user experience around data handling.

Impact on User Trust and Confidence

A well-crafted data policy directly impacts user trust. Users are more likely to trust a service that clearly outlines how their data is handled, particularly when sensitive personal information is involved. Conversely, vague or opaque policies can erode trust and damage the reputation of the service. Users are less likely to engage with a service they perceive as untrustworthy.

Influence on User Engagement

How a service communicates its data handling practices directly influences user engagement. A clear and user-friendly policy encourages trust, leading to increased engagement and potentially longer retention. Users who feel confident about the security and privacy of their data are more likely to use the service actively and to participate in features that rely on data sharing. Conversely, a poorly communicated policy can discourage engagement.

Improving User Trust in the Policy

User trust in the policy can be enhanced by focusing on transparency and simplicity. Avoid jargon and use plain language that is easily understandable by the average user. Providing clear examples of how the policy applies in different situations can further build confidence. Include contact information and mechanisms for user feedback, allowing users to directly address concerns and suggest improvements.

Examples of Building User Trust

Building user trust is a continuous process that requires proactive steps. Clearly outlining data usage, with specific examples of how the data is employed, is crucial. Demonstrating a commitment to security through measures like encryption and regular security audits can strengthen trust. Actively responding to user feedback and promptly addressing concerns further enhances trust. Transparency about the data collected, the purpose of its collection, and its intended use fosters a sense of security and control.

Communicating the Policy Effectively

Effective communication of the policy is vital. The policy should be easily accessible on the website and presented in a user-friendly format. Consider adding FAQs and short videos to clarify complex concepts. Multilingual support and different formats (e.g., text, audio) can accommodate diverse user needs. Offering the policy at different levels of detail, including simplified versions for readers without technical backgrounds, ensures inclusivity.

User Interaction Flowchart

| Step | User Action | System Response |
|---|---|---|
| 1 | User visits the data policy page | System displays the policy document |
| 2 | User reviews the policy | System provides links to FAQs, contact information, or feedback mechanisms |
| 3 | User identifies a concern or question | System guides the user to the relevant section or provides a direct contact method |
| 4 | User submits feedback | System acknowledges receipt and informs user of next steps |
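Step 4 of the flow, recording feedback, acknowledging receipt, and telling the user what happens next, can be sketched as a small handler. The `FeedbackDesk` class, the ticket ID format, and the acknowledgement wording are hypothetical.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, Iterator, Tuple


@dataclass
class FeedbackDesk:
    """Hypothetical handler for step 4: store feedback and acknowledge it."""

    tickets: Dict[str, Tuple[str, str]] = field(default_factory=dict)
    _counter: Iterator[int] = field(default_factory=lambda: itertools.count(1))

    def submit(self, user_id: str, message: str) -> str:
        ticket_id = f"FB-{next(self._counter):05d}"
        self.tickets[ticket_id] = (user_id, message)
        # Acknowledge receipt and tell the user what happens next.
        return (
            f"We received your feedback ({ticket_id}). "
            "Our support team will review it and reply to you."
        )


if __name__ == "__main__":
    desk = FeedbackDesk()
    print(desk.submit("u1", "Are my files used for training?"))
```

Returning a ticket ID gives the user a concrete reference to quote if they follow up through another support channel.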

Influence on User Choices

The data policy directly influences user choices regarding the service. Users who trust the service’s data handling practices are more likely to sign up, use the service actively, and share personal data. Conversely, users who are concerned about data security or privacy may choose to avoid the service altogether. This highlights the importance of a clear and transparent policy for maintaining a loyal user base.

Legal and Ethical Considerations

Navigating the complex landscape of data handling requires a robust framework that considers both legal obligations and ethical principles. This framework should not only comply with relevant regulations but also uphold user trust and ensure responsible data practices. A well-defined policy addresses the rights of individuals, the potential consequences of violations, and the importance of maintaining user privacy. A comprehensive policy outlines legal and ethical considerations, providing a roadmap for data handling.

This includes identifying applicable regulations, understanding the potential impact on users, and establishing clear procedures for resolving disputes. By incorporating ethical considerations, the policy strengthens user trust and fosters a culture of responsible data handling.

Legal Requirements Surrounding Data Handling

Data handling regulations vary significantly across jurisdictions, making a global approach challenging. Compliance demands a deep understanding of local laws and regulations. For instance, GDPR in the European Union and CCPA in California set stringent standards for data collection, storage, and processing. Understanding these varying requirements is crucial for businesses operating in multiple markets.

- Data Protection Laws:

  - Data subjects have rights regarding access, rectification, erasure, and restriction of processing.

  - Organizations must demonstrate compliance with legal requirements, such as having a lawful basis (for example, informed consent) for data collection.

  - Specific industry sectors, like healthcare and finance, often have stricter data protection regulations.

Ethical Implications of the Statement

The ethical implications of the statement extend beyond legal requirements. Transparency, fairness, and respect for user autonomy are paramount. The statement should outline the purpose of data collection, how data is used, and how user rights are protected. Examples include ensuring data minimization and avoiding discriminatory practices.

- Transparency and Consent:

  - Data collection purposes must be clearly defined and disclosed to users.

  - Informed consent should be obtained for all data processing activities.

  - Transparency builds trust and reduces potential misuse of data.
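The transparency and consent points above can be enforced in code by recording consent per user and per disclosed purpose, and refusing any processing whose purpose lacks recorded consent. The `ConsentLedger` class and the purpose names are hypothetical; in keeping with the policy, model training is simply not among the disclosed purposes.

```python
from typing import Dict, Set

# Hypothetical purposes disclosed to users; model training is deliberately absent.
DECLARED_PURPOSES = frozenset({"service_delivery", "billing", "support"})


class ConsentLedger:
    """Tracks which disclosed purposes each user has consented to."""

    def __init__(self) -> None:
        self._consents: Dict[str, Set[str]] = {}

    def give_consent(self, user_id: str, purpose: str) -> None:
        # Consent can only be recorded for purposes that were disclosed.
        if purpose not in DECLARED_PURPOSES:
            raise ValueError(f"undeclared purpose: {purpose}")
        self._consents.setdefault(user_id, set()).add(purpose)

    def withdraw_consent(self, user_id: str, purpose: str) -> None:
        self._consents.get(user_id, set()).discard(purpose)

    def may_process(self, user_id: str, purpose: str) -> bool:
        return purpose in self._consents.get(user_id, set())


if __name__ == "__main__":
    ledger = ConsentLedger()
    ledger.give_consent("u1", "support")
    print(ledger.may_process("u1", "support"))  # True
    print(ledger.may_process("u1", "billing"))  # False
```

Under GDPR, consent is only one of several lawful bases for processing; this sketch models just the consent case.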

User Rights Addressed in the Policy

The policy should clearly articulate the rights of users regarding their personal data. This includes the right to access, correct, delete, and restrict data processing. Users should be informed about these rights and how to exercise them. The policy should provide clear channels for users to raise concerns and file complaints.

- User Access and Control:

  - Users should have the right to access their personal data held by the organization.

  - Users should be able to correct inaccuracies and request data deletion.

  - Clear procedures for exercising these rights should be provided.
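The four rights described above (access, correction, deletion, and restriction of processing) can be sketched as operations on a simple in-memory store. The `PersonalDataStore` class and its method names are hypothetical; a real system would also need identity verification and request logging.

```python
from typing import Any, Dict, Set


class PersonalDataStore:
    """Hypothetical in-memory store exposing the four user rights."""

    def __init__(self) -> None:
        self._data: Dict[str, Dict[str, Any]] = {}
        self._restricted: Set[str] = set()

    def save(self, user_id: str, record: Dict[str, Any]) -> None:
        self._data[user_id] = dict(record)

    def access(self, user_id: str) -> Dict[str, Any]:
        # Right of access: return a copy so callers cannot mutate stored data.
        return dict(self._data.get(user_id, {}))

    def rectify(self, user_id: str, field: str, value: Any) -> None:
        # Right to rectification: correct an inaccurate field.
        if user_id not in self._data:
            raise KeyError(user_id)
        self._data[user_id][field] = value

    def erase(self, user_id: str) -> bool:
        # Right to erasure: remove the record and any restriction flag.
        self._restricted.discard(user_id)
        return self._data.pop(user_id, None) is not None

    def restrict(self, user_id: str) -> None:
        # Right to restriction: keep the data stored but stop processing it.
        self._restricted.add(user_id)

    def can_process(self, user_id: str) -> bool:
        return user_id in self._data and user_id not in self._restricted
```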

Consequences of Violating the Policy

Violating the data handling policy can result in significant legal and reputational consequences. These may include fines, legal action, damage to brand reputation, and loss of user trust. Robust mechanisms for monitoring and enforcing the policy are crucial to prevent violations.

- Legal Penalties:

  - Non-compliance can result in substantial financial penalties.

  - Legal action from affected users or regulatory bodies is possible.

  - Reputational damage can significantly impact business operations.

Comparison with Similar Policies in Other Sectors

Comparing data handling policies across different sectors, like finance, healthcare, and education, reveals variations in requirements and approaches. Regulations often reflect the sensitivity and potential risks associated with the type of data handled in each sector. For example, healthcare data handling policies often prioritize patient privacy and confidentiality.

Summary of Relevant Laws and Regulations

A concise summary of relevant laws and regulations should be included in the policy. This will outline the legal framework governing data handling in the relevant jurisdiction(s). The policy should clearly state the applicable laws and regulations, such as GDPR, CCPA, and other relevant data protection laws.

Importance of Responsible Data Handling

Responsible data handling is essential for maintaining user trust and protecting sensitive information. A robust policy fosters ethical practices, supports compliance with legal obligations, and upholds user rights. The policy promotes transparency, fairness, and accountability in data handling. Examples include preventing data breaches and minimizing the risk of misuse.

Alternative Interpretations and Clarifications

Navigating policy statements can be tricky, especially when nuances and ambiguities exist. This section delves into potential alternative interpretations, clarifies any ambiguities, and offers examples of how the policy might be understood differently in various scenarios. We also explore potential loopholes and compare our policy with competitor statements, providing insights into how we address user concerns.

Different Interpretations of the Statement: Your Files Will Not Be Used To Train Our Models

Different individuals and groups may interpret the statement in various ways. This is a common phenomenon, especially with policies that have broad applications. Understanding these diverse perspectives is crucial for building trust and ensuring fairness in implementation. We aim to present the policy in a way that minimizes ambiguity and maximizes clarity for all stakeholders.

Clarification on Ambiguities and Potential Misunderstandings

Some parts of the policy might appear ambiguous or lead to misunderstandings. This section clarifies potential areas of confusion and offers alternative formulations to enhance clarity. For example, a statement about data retention might be interpreted differently by users depending on their understanding of storage and usage protocols. We address such interpretations by outlining specific criteria for data retention and deletion, ensuring a transparent and easily understood process.

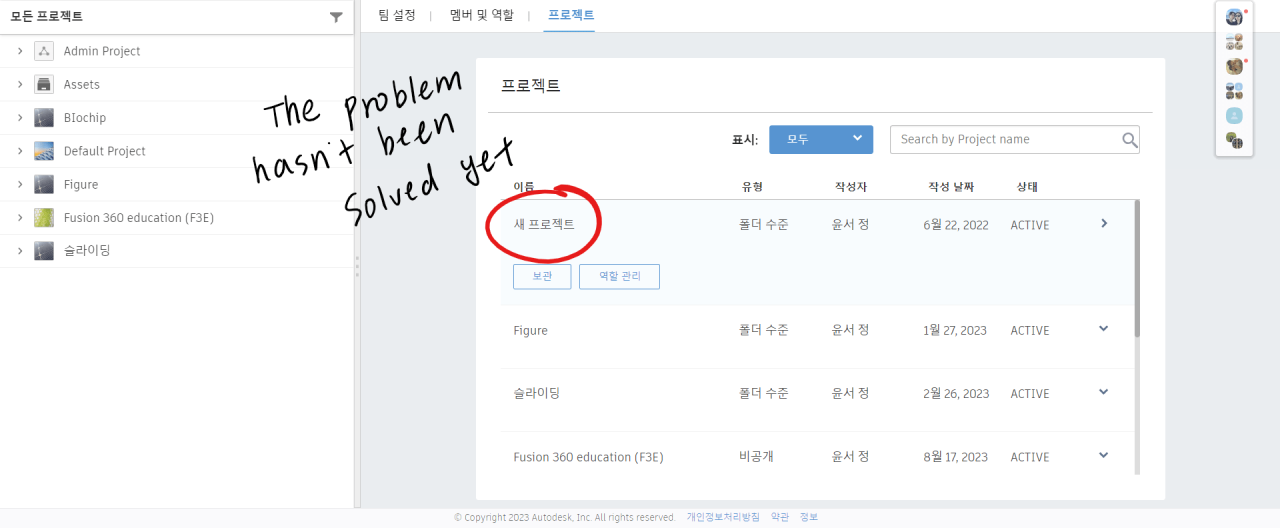

Examples of Situations Where the Statement Might Be Unclear

To illustrate potential ambiguities, consider a scenario where a user uploads a file that contains sensitive information, but the policy does not explicitly define what constitutes “sensitive information.” Another example could involve a user contesting a decision based on the policy, where the steps for appeal are not clearly articulated. Such scenarios highlight the importance of explicit definitions and well-defined processes.

Alternative Ways to Phrase the Statement for Better Clarity

Rephrasing certain sections of the policy can improve comprehension. Instead of a general statement about data security, we can use more precise language like “all user data is encrypted at rest and in transit,” or “we will retain user data for a maximum of [timeframe] unless required by law.” These examples aim for greater clarity and transparency.

Potential Loopholes in the Statement

Thorough review of the policy is essential to identify potential loopholes. For example, a lack of specific rules for handling certain types of data (e.g., user-generated content) might be considered a loophole. This analysis is ongoing, and the policy will be updated as needed to address any gaps or vulnerabilities.

Comparison with Competitor Statements

Comparing our policy with those of competitors allows us to benchmark our approach and identify areas for improvement. Competitors’ policies might address specific concerns or offer alternative solutions. This comparison helps keep our policy competitive and responsive to current challenges in the industry.

Processes for Addressing User Inquiries About the Policy

A dedicated support team is available to answer user inquiries regarding the policy. Users can submit their questions via email, phone, or online chat. These channels provide a clear and efficient method for addressing concerns and clarifying any misunderstandings related to the policy. We strive to provide prompt and comprehensive responses.

Final Review

In conclusion, our commitment to the principle “Your files will not be used to train our models” is paramount. This policy reflects a proactive approach to data privacy, aiming to build trust and ensure responsible data handling. By understanding the nuances of this statement, the security measures in place, and the ethical considerations, we hope users feel confident in our commitment to safeguarding their information.

This policy isn’t just about words; it’s about action and a dedication to user well-being.