This article explores adversarial typographic attacks on machine vision, with a focus on OpenAI’s CLIP model and its multimodal neurons, and the vulnerabilities these attacks expose in AI systems. It investigates how typographic manipulations can deceive sophisticated machine vision models, particularly those using multimodal neurons. We’ll dissect the mechanisms behind these attacks and examine how OpenAI’s models fare against them. The implications are far-reaching, affecting everything from image recognition to security protocols.

The article will delve into the specifics of adversarial typographic attacks, explaining how slight alterations in font, character shapes, or even subtle distortions can completely mislead machine vision systems. We’ll see how these attacks work on multimodal neurons, highlighting the vulnerability of deep learning models when presented with manipulated data. Furthermore, the exploration extends to OpenAI’s role, evaluating the robustness of their models against these novel attacks, and comparing their performance with competing models.

Defining Adversarial Typographic Attacks in Machine Vision

Machine vision systems are increasingly relied upon for tasks ranging from automated quality control to medical image analysis. However, these systems are vulnerable to various forms of adversarial attacks, which manipulate input data to mislead the system. One such emerging class of attacks exploits the inherent characteristics of typography to subvert the intended functionality of machine vision algorithms. Adversarial typographic attacks are a specific type of adversarial input designed to fool machine vision models by subtly altering the visual representation of text or images.

These attacks are distinct from other forms of adversarial input because they leverage the nuanced properties of typography, such as font styles, kerning, and letter spacing, to create imperceptible distortions that significantly impact the system’s interpretation. These manipulations are often imperceptible to the human eye but are potent in deceiving the algorithms.

Definition of Adversarial Typographic Attacks

An adversarial typographic attack in machine vision involves the deliberate alteration of typography within an image or text input, such as a document or a street sign, to mislead a machine vision system. These alterations are specifically designed to be imperceptible to the human eye while significantly changing the interpretation of the system. The subtle modifications exploit the system’s reliance on specific visual patterns associated with characters and their arrangement.

Characteristics Distinguishing These Attacks

Adversarial typographic attacks differ from other forms of adversarial input in their reliance on the intricate nuances of typography. While other methods might focus on pixel-level manipulations or adding noise, typographic attacks target the visual representation of text or characters, aiming to cause a misclassification or a flawed interpretation. The key is the specific nature of the changes; they exploit the way a machine vision system processes text or characters, making them different from simply adding noise.

Role of Typography in Creating These Attacks

Typography plays a critical role in these attacks. Font styles, kerning (the adjustment of space between letters), letter spacing, and even subtle variations in line height can all be exploited. For example, a slight change in the kerning of a character could make it appear visually similar to another character, yet produce a different interpretation by the system.

Attackers leverage the inherent ambiguity and slight variations in typographic elements to create visual illusions.

Vulnerabilities of Different Machine Vision Models

The vulnerabilities of machine vision models to these attacks depend on the specific architecture and training data. Models trained on limited datasets or those relying heavily on pixel-level features may be more susceptible. Convolutional Neural Networks (CNNs), which are frequently used in machine vision, can be vulnerable to these attacks because they often rely on the detection of local patterns and visual characteristics within an image.

For instance, models focused on character recognition or optical character recognition (OCR) are particularly susceptible.

Impact on Accuracy and Reliability

The impact of these attacks on the accuracy and reliability of machine vision systems can be significant. Successfully executed attacks can lead to misclassifications, incorrect interpretations, or even a complete failure of the system to perform its intended function. This can have serious implications in various domains, including security systems, medical diagnoses, and autonomous driving. For example, a modified street sign could cause a self-driving car to misinterpret the road conditions, potentially leading to an accident.

Similarly, a forged document with subtly altered typography could lead to fraudulent activity.

Exploring the Impact on Multimodal Neurons

Adversarial typographic attacks, while primarily targeting visual recognition models, have a significant impact on the workings of multimodal deep learning models. These models process information from multiple sources, like images and text, and combine these diverse inputs to achieve a richer understanding. When typographic elements are manipulated to mislead the model, it can disrupt this integrated understanding and affect the overall accuracy of the system.

The attack’s effectiveness depends heavily on the specific architecture of the multimodal neuron and the nature of the typographic manipulation. Multimodal neurons in deep learning models are designed to integrate information from various modalities. This integration is achieved by combining the activation patterns of neurons responding to different inputs. Typographic attacks, by altering the visual representation of the text component, can lead to subtle or significant changes in the activation patterns of these multimodal neurons.

These changes might cause the neuron to fire in a way that misrepresents the intended meaning, potentially leading to misclassifications or erroneous interpretations. The attack’s success hinges on the model’s susceptibility to these subtle visual variations within the multimodal input.

Impact on Activation Patterns

Typographic alterations directly affect the activation patterns of multimodal neurons. Font substitution, for example, might result in a slight shift in the activation levels of neurons associated with specific visual features of the text. The model might interpret the slightly different font as a meaningful change in the text’s visual representation. Similarly, character distortion can cause a significant deviation in activation patterns, potentially misleading the neuron into misinterpreting the text content.

This deviation from the expected activation pattern is crucial to understanding how adversarial attacks exploit the model’s vulnerabilities.
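
The difference between a slight shift and a significant deviation can be illustrated with a toy unit that scores a glyph against a stored template. This is only a sketch under stated assumptions: the 5x5 bitmaps, the template-matching rule, and the `off_weight` penalty are all hypothetical and are not taken from CLIP or any real model.

```python
# Toy "text feature" unit: each ink pixel that lies on the template adds 1.0,
# each ink pixel off the template adds off_weight, and the sum passes through ReLU.
# All bitmaps and weights are hypothetical illustration values.

REFERENCE_T = [
    "#####",
    "..#..",
    "..#..",
    "..#..",
    "..#..",
]
# The same letter in a hypothetical second font (adds a small serif at the base).
FONT_B_T = [
    "#####",
    "..#..",
    "..#..",
    "..#..",
    ".###.",
]
# A heavily distorted version of the letter.
DISTORTED_T = [
    "##...",
    "..#..",
    "...#.",
    "...#.",
    "....#",
]

def to_pixels(rows):
    """Convert '#'/'.' rows to 1.0/0.0 pixel values."""
    return [[1.0 if ch == "#" else 0.0 for ch in row] for row in rows]

def unit_activation(template_rows, glyph_rows, off_weight=-0.5):
    """ReLU of the template-weighted sum of the glyph's ink pixels."""
    total = 0.0
    for t_row, g_row in zip(to_pixels(template_rows), to_pixels(glyph_rows)):
        for t, g in zip(t_row, g_row):
            weight = 1.0 if t == 1.0 else off_weight
            total += weight * g
    return max(0.0, total)

for name, glyph in [("reference", REFERENCE_T), ("font B", FONT_B_T), ("distorted", DISTORTED_T)]:
    print(name, unit_activation(REFERENCE_T, glyph))
```

In this toy setup the substituted font lowers the activation only slightly, while the distorted glyph drops it sharply, which mirrors the qualitative distinction drawn above.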

Potential for Misleading Multimodal Neurons

Adversarial typographic attacks can effectively mislead multimodal neurons by creating subtle, yet impactful, distortions in the visual representation of text within the multimodal input. This manipulation can confuse the model’s interpretation, especially when the model is designed to rely heavily on visual cues from the text component. The model might misinterpret the distorted text, potentially leading to a misalignment between the model’s interpretation of the multimodal input and the actual intended meaning.

For example, a subtle blurring of characters in a caption accompanying an image could cause the model to incorrectly associate the image with a different concept.
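
A minimal sketch of how such a misassociation could arise in an image-text matching setup follows. It assumes a classifier that picks the label whose embedding has the highest cosine similarity to the image embedding. The three-dimensional vectors, the label set, and the `text_overlay` contribution are hypothetical; real models use learned embeddings with hundreds of dimensions.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical label embeddings.
LABELS = {"apple": [1.0, 0.0, 0.2], "ipod": [0.0, 1.0, 0.2]}

def classify(image_embedding):
    """Return the label whose embedding is most similar to the image embedding."""
    return max(LABELS, key=lambda name: cosine(image_embedding, LABELS[name]))

apple_photo = [0.9, 0.1, 0.3]      # hypothetical embedding of an unmodified photo
text_overlay = [0.0, 1.2, 0.0]     # hypothetical shift caused by text placed in the image
attacked = [p + t for p, t in zip(apple_photo, text_overlay)]

print(classify(apple_photo))   # label for the clean image
print(classify(attacked))      # label after the text shifts the embedding
```

The point of the sketch is that the object in the image never changes; only the embedding moves, and the nearest label moves with it.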

Impact on System Performance

The manipulation of typography in adversarial attacks can have a detrimental impact on the overall performance of a multimodal system. The system’s ability to correctly process and interpret multimodal data is directly affected by the success of the typographic attack. If the attack effectively alters the activation patterns of multimodal neurons, it can lead to misclassifications, incorrect interpretations, or a significant reduction in the system’s accuracy.

This reduction in accuracy can have substantial real-world implications, particularly in applications where accurate multimodal understanding is critical.

Typographic Manipulation Techniques

| Attack Type | Typographic Manipulation | Impact on Multimodal Neuron | Example |
|---|---|---|---|
| Font Substitution | Replacing a font with a similar but subtly different one. | Slight shift in activation pattern. | Substituting Arial with Calibri. |
| Character Distortion | Deliberately distorting characters in the image. | Significant deviation in activation pattern. | Slightly blurring or stretching characters. |
| Color Manipulation | Altering the color of text elements in an image. | Potentially significant change in activation pattern, depending on the model’s weighting of color cues. | Changing the color of text from black to dark gray. |
| Layout Alteration | Modifying the spatial arrangement of text elements. | Shift in activation patterns, potentially affecting the model’s understanding of the context. | Slightly shifting the position of text within an image. |
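
Three of the manipulations in the table can be sketched on a tiny grayscale bitmap. This is an illustrative sketch only: the image representation (rows of 0-255 values, 0 being black ink), the function names, and the thresholds are assumptions, and a real experiment would use an imaging library on rendered text.

```python
# An image is a list of rows; each pixel is a grayscale value in [0, 255].

def recolor_text(img, ink_threshold=64, new_ink=80):
    """Color manipulation: turn near-black ink into dark gray."""
    return [[new_ink if px <= ink_threshold else px for px in row] for row in img]

def shift_right(img, dx, background=255):
    """Layout alteration: shift content dx pixels right (0 <= dx <= width)."""
    return [[background] * dx + row[: len(row) - dx] for row in img]

def box_blur_horizontal(img):
    """Character distortion: 3-pixel horizontal box blur, clamping at the edges."""
    blurred = []
    for row in img:
        n = len(row)
        blurred.append([
            (row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) // 3
            for i in range(n)
        ])
    return blurred

sample = [
    [255, 0, 0, 255],
    [255, 0, 255, 255],
]
print(recolor_text(sample))
print(shift_right(sample, 1))
print(box_blur_horizontal(sample))
```

Font substitution is omitted because it requires rendering glyphs from font files, which is beyond what a dependency-free sketch can show.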

OpenAI’s Role in Machine Vision

OpenAI has significantly impacted the field of machine vision through its work in developing and deploying advanced models. Its research and applications have pushed the boundaries of what’s possible in computer understanding of images and videos, leading to numerous practical applications. From image generation to object recognition, OpenAI’s models have garnered considerable attention and adoption. OpenAI’s contributions extend beyond simply creating models; it has also contributed to the broader research community by releasing code and pretrained weights for some models, such as CLIP, fostering collaboration and innovation.

This approach has facilitated rapid progress in the field and has helped to establish OpenAI as a key player in the advancement of machine vision technology.

OpenAI’s Machine Vision Models

OpenAI has developed a range of sophisticated machine vision models, each designed for specific tasks. These models utilize various architectures and techniques to achieve high performance in image recognition, object detection, and other related tasks. Their models have been instrumental in advancing the capabilities of machine vision systems.

Specific OpenAI Models and their Applications

Several OpenAI models have made significant strides in machine vision. For example, DALL-E 2 and CLIP excel in image generation and image-text matching, respectively. Both build on transformer components, which have demonstrated strong performance in natural language processing and computer vision tasks; CLIP, for instance, pairs a transformer text encoder with an image encoder that is either a ResNet or a Vision Transformer. Other machine vision models, such as those employed in robotics and autonomous driving, are designed for real-time object recognition and scene understanding.

Vulnerability to Adversarial Typographic Attacks

Adversarial attacks targeting machine vision models are a growing concern. Typographic attacks, in particular, alter input data with text to deceive the model, potentially leading to incorrect or dangerous outcomes. OpenAI’s models, while generally robust, are not immune to these attacks. In OpenAI’s own research on CLIP’s multimodal neurons, attaching a handwritten label reading "iPod" to an apple was enough to make the model’s zero-shot classifier label the image as an iPod. The intricate architecture of these models can make them susceptible to manipulation, especially when dealing with complex or ambiguous visual information.

Comparison with Competing Models

The robustness of machine vision models against adversarial typographic attacks varies significantly. Factors such as the model architecture, training data, and optimization techniques play a crucial role. OpenAI’s models, while powerful, might exhibit varying levels of susceptibility compared to models developed by other organizations. A comparative analysis is crucial to understanding the strengths and weaknesses of different approaches.

Robustness Comparison Table

| Model | Robustness | Vulnerability to Typographic Attacks | Mitigation Strategies |
|---|---|---|---|
| OpenAI DALL-E 2 | High | Potentially vulnerable to targeted attacks | Requires further investigation into adversarial examples; training on diverse datasets |
| OpenAI CLIP | Medium | Susceptible to text-based perturbations | Defense mechanisms focused on robust text embeddings are needed. |
| Google Vision API | High | Generally less vulnerable | Regular updates and enhancements to adversarial training. |
| Microsoft Azure Computer Vision | Medium | Vulnerable to certain typographic manipulations | Emphasis on continuous improvement and defense against attacks. |

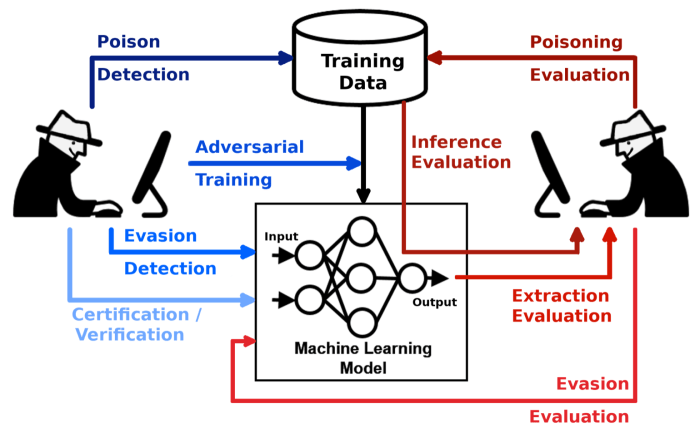

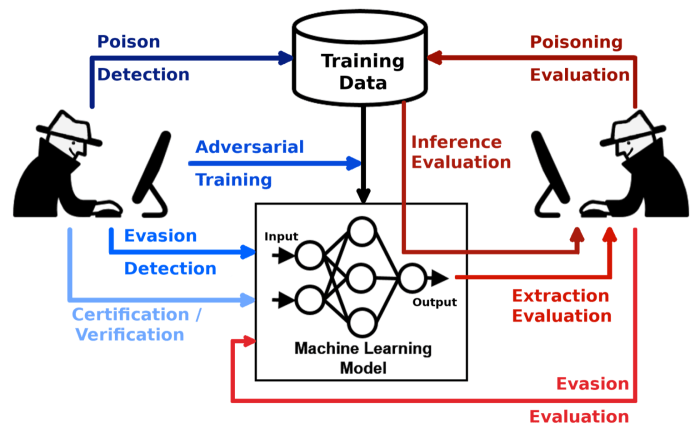

Techniques for Detection and Mitigation

Adversarial typographic attacks, while subtle, pose a significant threat to the reliability of machine vision systems. These attacks exploit the vulnerabilities of machine learning models by subtly altering input data to misdirect the system’s interpretation. Robust defenses are crucial to ensure the accuracy and trustworthiness of machine vision applications in diverse real-world scenarios. Effective detection and mitigation strategies are vital to protect against these attacks.

Understanding the intricacies of these attacks and developing countermeasures is essential for safeguarding the integrity of machine vision systems.

Methods for Detecting Adversarial Typographic Attacks

Detecting these attacks requires a multifaceted approach. Traditional image analysis techniques are often insufficient. Specialized algorithms are necessary to identify subtle modifications that might go unnoticed by the human eye. Key methods include:

- Statistical Analysis: Analyzing the statistical properties of the image, such as pixel distributions, color histograms, and texture features, can reveal anomalies. Deviations from the expected patterns, indicative of adversarial manipulation, can trigger alerts.

- Deep Learning-based Detection: Training separate models specifically to detect adversarial examples is effective. These models learn to recognize patterns and characteristics indicative of adversarial modifications. This approach often leverages pre-trained deep learning architectures for efficient feature extraction.

- Gradient-Based Methods: Identifying areas of the image where small changes lead to significant alterations in the model’s predictions can pinpoint adversarial alterations. Techniques like evaluating the gradient of the model’s output with respect to the input image pixels can reveal these vulnerabilities.
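
The gradient-based idea in the last bullet can be sketched without a deep learning framework by approximating the gradient with central finite differences. This is a minimal sketch: `toy_model` and its weights are hypothetical stand-ins for a real network, and in practice automatic differentiation would replace the finite-difference loop.

```python
def finite_difference_saliency(model, pixels, step=1e-3):
    """Approximate |d model / d pixel_i| for every pixel using central differences."""
    saliency = []
    for i in range(len(pixels)):
        up = list(pixels)
        up[i] += step
        down = list(pixels)
        down[i] -= step
        saliency.append(abs(model(up) - model(down)) / (2 * step))
    return saliency

def toy_model(pixels):
    """Hypothetical stand-in model: a linear score over a flattened 4-pixel image."""
    weights = [0.1, 2.0, -0.2, 0.05]
    return sum(w * p for w, p in zip(weights, pixels))

scores = finite_difference_saliency(toy_model, [0.5, 0.5, 0.5, 0.5])
most_sensitive = max(range(len(scores)), key=scores.__getitem__)
print(most_sensitive, scores)
```

Pixels with unusually large sensitivity are the places where a small change moves the prediction most, so they are natural candidates for closer inspection.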

Mitigation Procedures for Adversarial Typographic Attacks

Mitigating adversarial attacks involves implementing strategies to strengthen the resilience of machine vision models. These approaches focus on making models less susceptible to manipulation and more robust to variations in input data.

- Adversarial Training: Training machine vision models on data augmented with adversarial examples. This approach strengthens the model’s resistance to various perturbations by forcing it to learn more robust representations of the data.

- Ensemble Methods: Combining multiple machine vision models to make predictions. This approach reduces the impact of individual model vulnerabilities, as the prediction from multiple models can act as a form of consensus. The final prediction is typically the average or mode of the predictions from the individual models.

- Input Preprocessing: Applying filters and techniques to cleanse the input data from potentially malicious or misleading modifications. This might include noise reduction, data normalization, or other preprocessing steps to improve the quality and reduce potential for adversarial attacks.
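
The ensemble idea can be sketched as a simple majority vote. The three models below are hypothetical toy functions that read different cues from a dictionary describing an image; they only illustrate how one text-sensitive model can be outvoted.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the most common label; ties go to the label encountered first."""
    return Counter(predictions).most_common(1)[0][0]

def ensemble_predict(models, image):
    """Collect one prediction per model and return the consensus label."""
    return majority_vote([model(image) for model in models])

# Hypothetical toy models, each relying on a different cue.
def shape_model(image):
    return image["shape"]

def texture_model(image):
    return image["texture"]

def text_reader_model(image):
    # This model trusts any text written in the image over the object itself.
    return image.get("written_text") or image["shape"]

attacked = {"shape": "apple", "texture": "apple", "written_text": "ipod"}
models = [shape_model, texture_model, text_reader_model]

print(text_reader_model(attacked))         # the single fooled model
print(ensemble_predict(models, attacked))  # the ensemble consensus
```

The benefit depends on the models failing in different ways; if every member shares the same sensitivity to written text, voting does not help.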

Best Practices for Building Robust Machine Vision Systems

Implementing these practices can bolster the resilience of machine vision systems against adversarial typographic attacks.

- Regular Model Evaluation: Continuously assessing the performance of machine vision models against various datasets, including adversarial examples. Regular evaluations can identify potential vulnerabilities and areas requiring improvement.

- Security Audits: Performing rigorous security audits to identify potential vulnerabilities in the machine vision system. This proactive approach involves systematically analyzing the system for weaknesses that could be exploited by attackers.

- Robustness Testing: Thorough testing with various adversarial examples and data perturbations is essential to validate the system’s resistance to attacks. This approach helps to identify the system’s limits and strengths in handling adversarial perturbations.
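
Regular evaluation and robustness testing can share one small harness that reports accuracy on clean inputs and on each perturbed variant. The sketch below is an assumption-laden illustration: `brightness_model`, the samples, and the two perturbations are hypothetical, and a real harness would plug in the production model and typographic perturbations.

```python
def accuracy(model, samples):
    """Fraction of (features, label) pairs the model labels correctly."""
    correct = sum(1 for features, label in samples if model(features) == label)
    return correct / len(samples)

def robustness_report(model, samples, perturbations):
    """Accuracy on clean data and under each named perturbation."""
    report = {"clean": accuracy(model, samples)}
    for name, perturb in perturbations.items():
        perturbed = [(perturb(features), label) for features, label in samples]
        report[name] = accuracy(model, perturbed)
    return report

def brightness_model(pixels):
    """Hypothetical classifier: 'dark' if mean intensity is below 128, else 'light'."""
    return "dark" if sum(pixels) / len(pixels) < 128 else "light"

samples = [
    ([10, 20, 30], "dark"),
    ([200, 220, 240], "light"),
    ([120, 125, 130], "dark"),   # sits close to the decision boundary
]
perturbations = {
    "brighten_10": lambda px: [min(255, p + 10) for p in px],
    "darken_10": lambda px: [max(0, p - 10) for p in px],
}

report = robustness_report(brightness_model, samples, perturbations)
print(report)
```

A drop between the clean score and any perturbed score points to inputs near a decision boundary, which is where further testing effort is best spent.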

Automated System for Adversarial Attack Identification

An automated system for identifying adversarial typographic attacks in images can be designed by combining multiple detection techniques. This system would involve:

- Image Preprocessing: Applying preprocessing techniques to standardize input images and enhance the accuracy of subsequent analysis.

- Feature Extraction: Using algorithms to extract key features from the images that can be used for analysis.

- Adversarial Detection Module: Implementing a dedicated module employing the aforementioned detection techniques (statistical analysis, deep learning, gradient-based methods). This module would flag images with suspicious characteristics.
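
The three stages above can be sketched as a small pipeline. Only the statistical detector is shown; the histogram features, the reference histogram, and the threshold are hypothetical choices, and the deep learning and gradient-based detectors would plug in as further checks at the last stage.

```python
def preprocess(pixels):
    """Image preprocessing: scale 0-255 grayscale values to [0, 1]."""
    return [p / 255 for p in pixels]

def histogram_features(pixels, bins=4):
    """Feature extraction: normalized histogram of pixel intensities."""
    counts = [0] * bins
    for p in pixels:
        counts[min(int(p * bins), bins - 1)] += 1
    return [c / len(pixels) for c in counts]

def is_suspicious(features, reference, threshold=0.5):
    """Detection: flag when the L1 distance to the reference histogram is large."""
    distance = sum(abs(f - r) for f, r in zip(features, reference))
    return distance > threshold

def detect(pixels, reference):
    """Run preprocessing, feature extraction, and detection in sequence."""
    return is_suspicious(histogram_features(preprocess(pixels)), reference)

# Hypothetical reference: clean documents are mostly white with some black ink.
REFERENCE = [0.25, 0.0, 0.0, 0.75]

clean_scan = [0, 255, 255, 255, 0, 255, 255, 255]
odd_scan = [0, 100, 120, 140, 90, 130, 110, 255]   # many unexpected mid-gray pixels

print(detect(clean_scan, REFERENCE))
print(detect(odd_scan, REFERENCE))
```

A statistical check like this only catches manipulations that change the intensity distribution, so it complements rather than replaces the other detectors.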

Strengthening Machine Vision Models Against Attacks

Strengthening machine vision models involves several key approaches:

- Robust Loss Functions: Using loss functions that are less sensitive to adversarial perturbations. These loss functions prioritize the learning of more robust representations.

- Adversarial Training Techniques: Using advanced adversarial training methods, such as projected gradient descent (PGD) or the fast gradient sign method (FGSM), to generate adversarial examples during training and improve the model’s resistance to them.

- Regularization Techniques: Implementing regularization techniques like dropout or weight decay to prevent overfitting and improve generalization, thereby making the model more robust to various input variations.
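
The fast gradient sign method mentioned above can be sketched on a logistic regression model, where the input gradient of the cross-entropy loss has a closed form. This is a minimal sketch with hypothetical weights and inputs; adversarial training would then mix such perturbed inputs, with their original labels, into the training data.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability of class 1 under logistic regression."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, y, epsilon):
    """Fast gradient sign method for logistic regression with cross-entropy loss.

    The loss gradient with respect to input i is (p - y) * w_i, so each input
    moves by epsilon in the direction of that gradient's sign.
    """
    p = predict(weights, bias, x)
    return [xi + epsilon * sign((p - y) * w) for xi, w in zip(x, weights)]

# Hypothetical model and input.
weights, bias = [2.0, -1.0], 0.0
x, y = [0.3, 0.1], 1

x_adv = fgsm(weights, bias, x, y, epsilon=0.3)
print(predict(weights, bias, x))      # above 0.5: classified as class 1
print(predict(weights, bias, x_adv))  # below 0.5: the perturbation flips the class
```

PGD can be viewed as repeating this step several times with a smaller step size while projecting back into the allowed perturbation range.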

Case Studies and Examples

Adversarial typographic attacks, while often theoretical, have real-world implications for machine vision systems. These attacks leverage the vulnerabilities of AI models to misclassify images, leading to potentially disastrous consequences in various applications. This section explores real-world examples, potential scenarios for harm, and the techniques used to manipulate images for malicious intent. Understanding how these attacks work is crucial to mitigating their risks.

Sophisticated manipulations of seemingly benign images can deceive AI systems, leading to incorrect interpretations. The consequences can range from minor inconveniences to significant security breaches, highlighting the importance of robust defenses.

Real-World Application Examples

AI-powered systems are increasingly integrated into critical infrastructure, from self-driving cars to medical imaging. The potential for adversarial typographic attacks to disrupt these systems is substantial. For instance, an attacker might subtly modify a stop sign image to trick a self-driving car into proceeding, potentially leading to a catastrophic accident. Similarly, in medical imaging, an adversarial attack on an X-ray could cause misdiagnosis, delaying or preventing proper treatment.

The implications for human safety are significant, and robust countermeasures are needed.

Consequences in Practical Settings

The consequences of adversarial typographic attacks can be far-reaching, impacting various sectors and potentially causing severe harm. A compromised system could lead to:

- Autonomous Vehicle Accidents: Subtle alterations to road signs, traffic signals, or even pedestrians could cause autonomous vehicles to misinterpret the environment, leading to collisions.

- Security Breaches: Malicious actors could use these attacks to bypass security systems that rely on image recognition, gaining unauthorized access to sensitive areas or data.

- Medical Misdiagnosis: Adversarial attacks on medical images, like X-rays or CT scans, could lead to misdiagnosis, delaying or preventing proper treatment and potentially causing harm to patients.

- Financial Fraud: Manipulation of images used in financial transactions could lead to fraudulent activities, such as unauthorized withdrawals or transfers.

These examples demonstrate the severity of the potential risks. The sophistication of these attacks is constantly evolving, requiring continuous monitoring and improvement of defenses.

Scenarios for Detrimental Attacks

The potential for adversarial typographic attacks extends far beyond the examples discussed. Consider these scenarios:

- Surveillance Systems: Altering surveillance footage could allow individuals to evade detection or manipulate the interpretation of events.

- Border Control: Manipulating passport or visa images could potentially allow unauthorized entry into a country.

- Criminal Investigations and Law Enforcement: Altering images of crime scenes or suspects could lead to misidentification, the wrong person being apprehended, or an investigation being derailed.

The scenarios highlight the diverse and potentially catastrophic consequences of such attacks. The ease of generating adversarial examples necessitates a comprehensive approach to defense.

Malicious Uses of Typographic Manipulation

Adversarial typographic attacks are not merely theoretical exercises; they can be exploited for malicious purposes. Consider the following:

- Identity Theft: Altering images of identification documents could enable the creation of fraudulent identities.

- Financial Fraud: Manipulating images used in online banking or financial transactions could lead to unauthorized access or theft.

- Cyber Warfare: These attacks could be used as part of larger cyber warfare campaigns, disrupting critical infrastructure or causing widespread chaos.

These malicious uses emphasize the need for robust security measures to prevent these attacks.

Illustrations of Typographic Manipulations

Adversarial examples can be created through various image manipulations, which can also be applied to the text rendered in an image, including:

- Noise Injection: Adding subtle, imperceptible noise patterns to an image can cause a machine vision system to misclassify it.

- Color Shifts: Slight changes in the color palette of an image can lead to incorrect interpretations.

- Geometric Distortions: Subtle modifications to the shape or geometry of an image can alter its perceived meaning.

These manipulations, while seemingly minor, can have significant effects on AI systems, highlighting the need for robust defense mechanisms.
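
Noise injection with a bounded per-pixel change can be sketched as follows. The sketch uses random noise for simplicity, which is an assumption made for illustration: random noise of this size rarely fools a model on its own, and real adversarial noise is optimized against the target model within the same kind of bound.

```python
import random

def inject_noise(pixels, epsilon, seed=0):
    """Add integer noise in [-epsilon, epsilon] to each 0-255 pixel, then clip."""
    rng = random.Random(seed)
    return [min(255, max(0, p + rng.randint(-epsilon, epsilon))) for p in pixels]

def max_change(original, modified):
    """L-infinity distance: the largest change made to any single pixel."""
    return max(abs(a - b) for a, b in zip(original, modified))

original = [0, 128, 255, 64]
noisy = inject_noise(original, epsilon=3)
print(noisy, max_change(original, noisy))
```

Bounding the largest per-pixel change is one common way to formalize the requirement that a perturbation stay subtle.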

Last Recap

In conclusion, the study of adversarial typographic attacks on OpenAI’s CLIP and its multimodal neurons underscores the crucial need for robust defense mechanisms in machine vision systems. We’ve seen how easily these models can be tricked by carefully crafted adversarial examples, showcasing the importance of continuous research and development in strengthening AI systems. The future likely holds a complex interplay between attackers and defenders, highlighting the ongoing challenge in building truly reliable and secure machine vision solutions.