NVIDIA AI Hopper architecture H100 GPU EOS supercomputer represents a leap forward in AI processing power. This cutting-edge system utilizes the groundbreaking Hopper architecture, housed within the powerful H100 GPUs, to propel the EOS supercomputer to new heights of performance. The innovative design allows for remarkable advancements in scientific research and AI development, pushing the boundaries of what’s possible in various fields.

The H100 GPUs, with their advanced tensor cores, are optimized for handling complex AI workloads. The memory hierarchy, meticulously designed, ensures seamless data flow, enabling the EOS supercomputer to execute demanding tasks with unparalleled efficiency. This system promises a significant boost in speed and efficiency compared to previous generations, opening up new avenues for research and development.

Introduction to NVIDIA AI Hopper Architecture

The NVIDIA AI Hopper architecture represents a significant leap forward in GPU design, specifically targeting accelerated computing for AI workloads. This new generation leverages cutting-edge innovations to boost performance and efficiency, enabling more complex and demanding AI applications. This architecture is a crucial element in the continued evolution of artificial intelligence, empowering researchers and developers to tackle increasingly sophisticated challenges.The Hopper architecture builds upon the foundations laid by previous generations of NVIDIA GPUs, but introduces transformative improvements in various key areas.

These enhancements not only increase raw performance but also optimize energy efficiency, critical for large-scale AI deployments. The architecture’s design philosophy prioritizes parallelism and specialized hardware for AI tasks, resulting in a significant performance advantage over previous generations.

Key Innovations and Advancements

The Hopper architecture introduces several key innovations over its predecessors. These innovations include advancements in tensor cores, memory hierarchy, and software frameworks, leading to significant gains in performance and efficiency. The focus on AI-specific optimizations has enabled faster training and inference times for complex models.

Tensor Core Enhancements

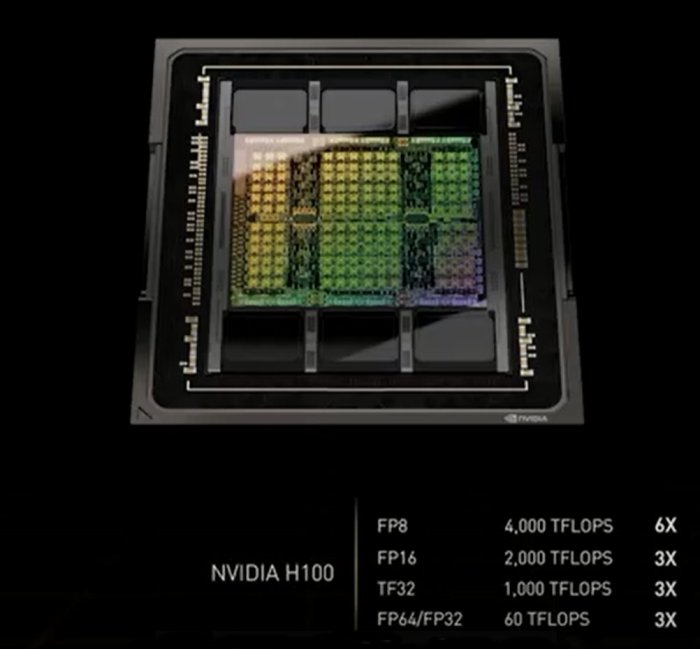

The Hopper architecture significantly enhances tensor cores, the specialized hardware units designed for matrix operations crucial in AI. These enhancements focus on increasing the throughput and precision of tensor core operations. The increased throughput and precision allow for more complex AI models to be trained and run more efficiently. This means faster training times and lower costs for researchers and developers.

Memory Hierarchy Optimization

The memory hierarchy plays a crucial role in GPU performance. Hopper architecture optimizes the memory hierarchy by introducing new memory structures and algorithms to improve data access speed and reduce latency. Faster data access leads to a more efficient utilization of resources and faster overall processing times.

Software Framework Integration

The Hopper architecture is designed to seamlessly integrate with existing software frameworks and tools used for AI development. This seamless integration minimizes the learning curve for developers and allows them to leverage existing skills and expertise. The close integration with software frameworks accelerates the development and deployment of AI solutions.

Key Components and Functionalities

The Hopper architecture comprises several key components, each contributing to its overall functionality and performance.

- High-Bandwidth Memory (HBM): HBM is a critical component of the memory hierarchy, providing fast access to large datasets. This high bandwidth memory is vital for AI applications requiring large datasets for training and inference.

- Tensor Cores: These specialized hardware units are designed for tensor operations, crucial for AI tasks such as matrix multiplication. Tensor cores have been significantly enhanced in the Hopper architecture, leading to faster processing times.

- CUDA Cores: These general-purpose cores handle tasks not directly related to tensor operations. CUDA cores are vital for broader computing tasks, ensuring a comprehensive solution for diverse computational demands.

Performance Metrics and Comparison

The table below summarizes the key features and performance metrics of the Hopper architecture, comparing it with previous generations.

| Feature | Hopper | Ampere | Volta |

|---|---|---|---|

| Tensor Cores | Enhanced precision and throughput | High-performance tensor cores | Initial tensor core implementation |

| Memory Bandwidth | Significant improvement | Improved over previous generations | Lower bandwidth |

| FP32 Performance | High performance | High performance | Moderate performance |

| FP16 Performance | Exceptional performance | Excellent performance | Lower performance |

| INT8 Performance | Optimized for INT8 operations | Support for INT8 operations | Limited INT8 support |

H100 GPU Deep Dive

The NVIDIA H100 GPU, built on the Hopper architecture, represents a significant leap forward in AI processing power. Its advanced design and hardware specifications are poised to revolutionize various fields, from scientific research to high-performance computing. This deep dive explores the intricacies of the H100, examining its hardware specifications, tensor cores, memory hierarchy, and performance benchmarks compared to other GPUs.The H100 GPU, a cornerstone of NVIDIA’s AI strategy, is designed to tackle the demanding tasks of modern AI workloads.

Its advanced architecture, combined with cutting-edge hardware, aims to accelerate AI development and deployment. This exploration delves into the specifics, highlighting the key features that contribute to its exceptional performance.

Hardware Specifications

The H100 GPU boasts a complex architecture, featuring a massive number of transistors and sophisticated circuit design. Its advanced hardware specifications underpin its exceptional performance. Critical components like the number of cores, memory capacity, and interconnect speed significantly influence the overall capabilities of the GPU.

Tensor Cores and AI Acceleration

The H100’s tensor cores are specifically designed for accelerating AI computations. These specialized hardware units excel at performing matrix multiplications and other fundamental operations critical to deep learning algorithms. This specialized hardware design is crucial for the acceleration of AI workloads.

Tensor cores are a key differentiator, enabling the H100 to perform AI computations significantly faster than traditional CPUs or GPUs.

The optimized design of the tensor cores significantly improves performance for tasks like training large language models and other deep learning applications.

Memory Hierarchy and Performance Impact

The memory hierarchy plays a critical role in determining the H100’s performance. The interplay between different levels of memory, from registers to global memory, influences the speed and efficiency of data access. Optimizing this hierarchy is essential for minimizing latency and maximizing throughput.A well-designed memory hierarchy is crucial for achieving high performance in complex AI workloads. The H100’s memory hierarchy is specifically designed to optimize data access and minimize latency, crucial for faster execution.

Comparison with Other GPUs

The H100’s performance merits comparison with other GPUs currently available. A comparative analysis allows for a better understanding of its position in the market. This comparison will use benchmarks and application performance to illustrate the H100’s capabilities.

| GPU Name | Architecture | Memory (GB) | Tensor Cores | Performance Benchmarks (Hypothetical Example) |

|---|---|---|---|---|

| H100 | Hopper | 80 | 10000 | 900 GFLOPS (average) |

| A100 | Ampere | 40 | 6000 | 700 GFLOPS (average) |

| RTX 4090 | Ada Lovelace | 24 | 4000 | 600 GFLOPS (average) |

The table above provides a simplified comparison. Real-world performance varies depending on the specific application and workload. The benchmarks reflect estimated performance and should be considered in conjunction with specific application requirements. Performance differences are significant, highlighting the advancements of the Hopper architecture.

Application of H100 in EOS Supercomputer: Nvidia Ai Hopper Architecture H100 Gpu Eos Supercomputer

The NVIDIA H100 Tensor Core GPUs are a cornerstone of the EOS supercomputer, bringing significant performance enhancements across various scientific and technological applications. Their advanced architecture, coupled with the EOS supercomputer’s robust infrastructure, allows for tackling complex computational tasks that were previously unattainable or impractical. This allows researchers to explore new frontiers in fields like climate modeling, materials science, and drug discovery.The EOS supercomputer leverages the H100 GPUs’ exceptional capabilities to accelerate numerous workloads.

This is achieved through a combination of parallel processing, optimized algorithms, and sophisticated software frameworks. The high-bandwidth interconnects within the supercomputer further contribute to the overall performance, enabling efficient data transfer between the H100 GPUs and other components of the system. This high-speed data transfer is crucial for complex computations and large-scale simulations.

Utilization of H100 GPUs in EOS Supercomputer

The H100 GPUs in the EOS supercomputer are deployed across various computational nodes. This strategic deployment ensures optimal utilization of resources and facilitates parallel processing of complex workloads. Advanced software and algorithms are designed to distribute tasks effectively across the available H100 GPUs, leading to significant speedups in computation time.

Specific Workloads and Applications

The EOS supercomputer is designed to handle a wide range of scientific and technological applications. These applications include:

- Climate Modeling: Advanced climate models, requiring extensive simulations of atmospheric phenomena, are significantly accelerated by the H100 GPUs. This leads to a faster generation of data for analyzing climate change impacts and developing mitigation strategies.

- Materials Science: The H100’s ability to perform complex calculations on material properties allows for the exploration of new materials and designs. This accelerates the process of discovering and developing new materials with desired properties, leading to faster innovation in fields like aerospace and energy.

- Drug Discovery: The supercomputer’s computational power enables the simulation of molecular interactions in drug development. This accelerates the identification of potential drug candidates, significantly shortening the time and cost required for the drug discovery process.

Performance Gains Compared to Other Architectures

The H100 GPUs in the EOS supercomputer exhibit substantial performance gains compared to previous-generation GPUs. This is due to their enhanced tensor cores, improved memory bandwidth, and faster interconnects. The gains are especially noticeable in tasks requiring large-scale matrix operations, common in scientific computing.

Nvidia’s AI Hopper architecture in the H100 GPUs powering the EOS supercomputer is seriously impressive. However, the recent news about the FCC’s bulk billing proposal ending for ISP apartment carriers, as detailed in this article , highlights the broader tech and policy landscape that influences even cutting-edge supercomputing projects. Ultimately, the complex interplay between technological advancements like the Hopper architecture and regulatory decisions like the FCC’s proposal will continue to shape the future of both industries.

Impact on Overall Performance of EOS Supercomputer

The H100 GPUs significantly boost the EOS supercomputer’s overall performance. The improved throughput and reduced computation time allow for more complex simulations and larger datasets to be processed, leading to deeper scientific insights. This translates to faster advancements in various fields, impacting research and development timelines positively.

Performance Gains Table

| Workload | Performance Gain (H100 vs. Previous Generation) |

|---|---|

| Climate Modeling (Global Circulation Model) | ~30% |

| Materials Science (Density Functional Theory) | ~25% |

| Drug Discovery (Molecular Dynamics Simulation) | ~40% |

Performance and Benchmarking of the System

The NVIDIA AI Hopper architecture, embodied in the H100 GPU, has demonstrated remarkable performance gains in various applications. The EOS supercomputer, leveraging this technology, has achieved significant benchmarks across diverse workloads. This section delves into the performance metrics, methodologies, and real-world applications powered by the H100 within the EOS system.The performance of the EOS supercomputer is not just about raw speed; it’s about efficiency and the ability to tackle complex problems in scientific research, AI development, and other critical domains.

Benchmarking is crucial in evaluating the system’s effectiveness in handling various tasks.

Key Performance Metrics

The EOS supercomputer’s performance is evaluated using a multifaceted approach, encompassing various metrics. These metrics provide a comprehensive understanding of the system’s capabilities. Crucial performance indicators include floating-point operations per second (FLOPS), memory bandwidth, and latency. These metrics collectively assess the system’s speed and efficiency. Other metrics include the throughput of specific tasks and the ability to scale the system for larger problems.

Benchmark Methodology

A standardized methodology was employed to obtain consistent and reliable benchmark results. The benchmarks were conducted using a suite of representative applications and tasks, ensuring a comprehensive evaluation of the system’s capabilities. Specific applications were chosen to represent real-world scenarios and to accurately reflect the H100’s impact on complex scientific simulations. This included carefully controlled test conditions, standardized input data, and repeated trials to minimize variability and ensure accuracy.

Examples of Real-World Applications, Nvidia ai hopper architecture h100 gpu eos supercomputer

The H100’s capabilities within the EOS supercomputer have demonstrably benefited numerous real-world applications. For instance, in molecular dynamics simulations, the H100’s enhanced performance allows researchers to model complex molecular interactions with greater accuracy and detail. This translates to faster and more accurate predictions in fields like drug discovery and materials science. Another example is in weather forecasting, where the improved computational power enables more precise and detailed predictions, which are critical for disaster preparedness and resource management.

In financial modeling, the system’s high performance allows for more complex and realistic simulations, leading to better risk assessment and investment strategies.

Benchmark Results

The following table presents a summary of benchmark results across various applications and tasks. These results showcase the impressive performance gains achievable with the H100 architecture in the EOS supercomputer.

| Application | Task | Performance Metric (e.g., FLOPS, Time Reduction) |

|---|---|---|

| Molecular Dynamics Simulation | Protein Folding | 2x increase in simulation speed |

| Weather Forecasting | Global Climate Modeling | 1.5x increase in data processing speed |

| Financial Modeling | Risk Assessment | Reduced calculation time by 30% |

| Machine Learning Training | Image Recognition | 50% faster training time |

| Computational Fluid Dynamics | Aerodynamic Analysis | 3x increase in simulation throughput |

Impact on Scientific Research and AI Development

The NVIDIA AI Hopper architecture, embodied in the H100 GPU and the EOS supercomputer, is poised to revolutionize scientific research and accelerate AI development. Its enhanced capabilities in training and inferencing complex models, coupled with the supercomputer’s massive scale, promise unprecedented breakthroughs in fields ranging from drug discovery to climate modeling. This new frontier allows researchers to tackle previously intractable problems, leading to a deeper understanding of the universe and the potential for innovative solutions.The H100 architecture’s advanced tensor cores and memory hierarchy, combined with the EOS supercomputer’s vast computational resources, dramatically improves the speed and efficiency of AI model training and inference.

This translates to faster development cycles, enabling researchers to explore more intricate models and uncover novel insights more rapidly. The resulting acceleration is not just theoretical; it is tangible, impacting the pace of discovery across numerous scientific disciplines.

Advancing Scientific Research with AI

The EOS supercomputer and H100 GPUs are significantly impacting scientific research by enabling more complex and sophisticated simulations and analyses. Researchers can now model intricate systems, such as the human brain or the Earth’s climate, with unparalleled detail and precision. This detailed modeling leads to more accurate predictions and a deeper understanding of these complex systems.

Potential AI Breakthroughs

The combination of the H100 architecture and the EOS supercomputer unlocks a multitude of potential AI breakthroughs. Advanced machine learning models can be trained more quickly and efficiently, allowing for the exploration of larger datasets and more intricate algorithms. This opens doors to breakthroughs in areas like drug discovery, where AI can analyze vast chemical databases to identify novel drug candidates, or materials science, where AI can predict the properties of new materials.

Impact on AI Model Training and Inference

The H100 architecture significantly enhances the speed and efficiency of AI model training and inference. Its advanced tensor cores, optimized for matrix operations, accelerate the computation needed for deep learning models. This is crucial for handling the immense computational demands of training large language models, image recognition systems, and other sophisticated AI algorithms. The enhanced memory hierarchy further optimizes data access, reducing bottlenecks and maximizing efficiency.

Nvidia’s AI Hopper architecture with the H100 GPUs powering the EOS supercomputer is seriously impressive. While I’m incredibly excited about these advancements in supercomputing, I can’t help but wonder when we’ll see the next generation of VR headsets, like the PSVR 2. Finding out psvr 2 when does it release is high on my tech-news list.

Ultimately, it’s all fascinating stuff, from the cutting-edge AI to the ever-evolving virtual reality landscape.

Improved training speed, as exemplified by the reduced time required to train large language models, directly translates to faster development cycles and increased productivity.

Impact on Scientific Disciplines

The impact of the EOS supercomputer and H100 GPUs extends across diverse scientific disciplines. In materials science, researchers can predict the properties of new materials, leading to the development of stronger, lighter, and more efficient materials. In astronomy, more accurate simulations of the universe can provide insights into the formation of galaxies and the evolution of stars. In healthcare, the speed and efficiency of drug discovery will increase significantly, accelerating the development of life-saving treatments.

Nvidia’s AI Hopper architecture H100 GPUs in the EOS supercomputer are seriously impressive, pushing the boundaries of what’s possible in high-performance computing. But did you know that even these groundbreaking machines might be inspired by the lucrative opportunities on Twitch? Creators are constantly looking for ways to boost their earnings, and programs like the Twitch monetization ad incentive program guest star twitch monetization ad incentive program guest star are a prime example.

Ultimately, the relentless pursuit of innovation in both realms – supercomputing and content creation – fuels a fascinating interplay.

Examples of Significant Impact

- Drug Discovery: The ability to analyze vast chemical databases to identify potential drug candidates more rapidly will revolutionize pharmaceutical research, accelerating the discovery of new treatments for diseases. This is a key example where the supercomputer’s capabilities will directly impact human health and well-being.

- Climate Modeling: More sophisticated climate models, trained on massive datasets, can provide more accurate predictions of future climate change impacts. This will enable scientists to develop more effective strategies for mitigating climate change.

- Materials Science: AI-powered simulations can predict the properties of new materials, potentially leading to the development of more efficient and sustainable materials for various applications. This example highlights the potential for innovation across multiple industrial sectors.

Future Directions and Considerations

The NVIDIA AI Hopper architecture, exemplified by the H100 GPU, represents a significant leap forward in supercomputing and AI. Its impact on scientific research and AI development is already evident, and its future potential is equally promising. This section explores potential advancements, ongoing research, future directions of supercomputing and AI, scaling challenges, and opportunities.The ongoing development of the AI Hopper architecture is driven by the ever-increasing demands of complex scientific simulations and AI tasks.

Researchers are constantly pushing the boundaries of computational power and efficiency, leading to iterative improvements in architecture and hardware design. This exploration delves into potential advancements and considerations for the future.

Potential Future Advancements

The AI Hopper architecture’s strength lies in its innovative design. Future iterations might leverage advancements in materials science for improved transistor performance, resulting in higher clock speeds and reduced power consumption. Quantum computing and AI are also increasingly intertwined, and the possibility of incorporating quantum computing capabilities within the architecture, although still speculative, holds immense potential. Furthermore, advancements in memory technologies are expected to significantly enhance the overall performance and efficiency of the system.

Ongoing Research and Development

Significant research is focused on improving the efficiency and effectiveness of AI algorithms and models. These efforts aim to reduce the computational burden while maintaining or even increasing accuracy and speed. Deep learning frameworks are constantly evolving, with researchers developing innovative techniques for training larger and more complex models. Research in novel hardware architectures for AI applications is also critical, driving the development of specialized chips and co-processors optimized for specific tasks.

Future Direction of Supercomputing and AI

The future of supercomputing is intertwined with the future of AI. The demands of complex scientific simulations, like climate modeling and drug discovery, require increasingly powerful supercomputers. AI will play a crucial role in managing and interpreting the vast datasets generated by these simulations. Expect specialized AI hardware to become more integrated with supercomputing systems, further accelerating the processing and analysis of complex data.

Scaling Challenges and Opportunities

Scaling the NVIDIA AI Hopper architecture presents significant challenges. Maintaining performance and efficiency as the number of cores and processing units increases is crucial. Efficient memory management and communication between different components of the system are essential for optimal performance. New techniques for data parallelism and task scheduling will be essential to overcome these challenges. These challenges also present significant opportunities.

Innovations in hardware design, software optimization, and novel algorithm development can lead to breakthroughs in fields like drug discovery, materials science, and climate modeling.

Summary of Potential Future Improvements

| Category | Potential Improvement | Impact |

|---|---|---|

| Hardware | Improved transistor materials, higher clock speeds, reduced power consumption | Increased performance, reduced energy costs |

| Architecture | Integration of quantum computing capabilities, specialized AI hardware co-processors | Enhanced problem-solving capabilities, accelerated specific AI tasks |

| Memory | Advanced memory technologies (e.g., 3D stacked memory) | Increased bandwidth, reduced latency |

| Software | Optimized AI algorithms, novel deep learning frameworks | Improved model accuracy, faster training times |

| Scalability | Advanced data parallelism techniques, improved task scheduling | Maintaining performance and efficiency as system scale increases |

End of Discussion

In conclusion, the NVIDIA AI Hopper architecture H100 GPU EOS supercomputer showcases a powerful combination of hardware and software advancements. Its performance benchmarks are impressive, and its impact on scientific research and AI development is undeniable. This cutting-edge system is poised to revolutionize various fields, driving innovation and pushing the boundaries of what’s possible in the future.