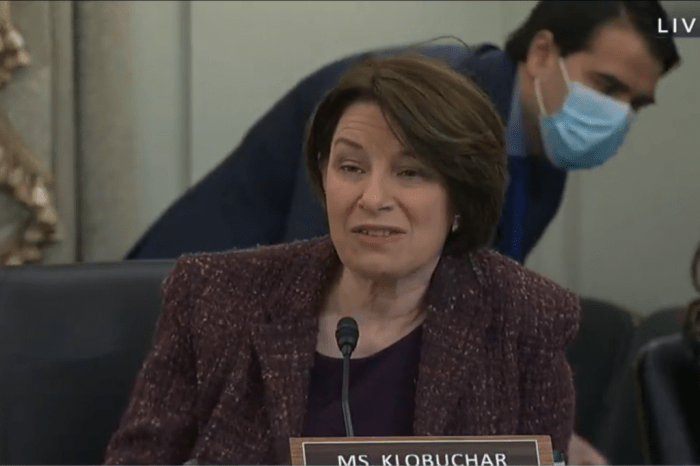

The Klobuchar-Lummis algorithm bill, Section 230, misinformation, and teenage mental health are at the heart of the current debate over online content and its impact on young people. We’ll examine the proposed legislation, the pervasiveness of misinformation, and the potential mental health consequences for teenagers.

The bill aims to address the spread of misinformation, but its impact on social media platforms and teenagers’ online experiences remains uncertain. We’ll explore how algorithms influence content visibility, the current strategies for combating misinformation, and the role social media platforms play in moderating harmful content. Ultimately, the discussion centers on finding ways to protect teenagers online without stifling free expression.

Overview of the Klobuchar-Lummis Bill

The Klobuchar-Lummis bill is a proposed piece of federal legislation aimed at how social media platforms and their algorithms amplify content, particularly misinformation. It is a complex proposal with potential ramifications for platforms, content creators, and users. The provisions most relevant here are those that affect social media platforms and the spread of misinformation.

A key focus is Section 230, a section of the Communications Decency Act that historically shielded online platforms from liability for user-generated content. The proposed changes to Section 230 are intended to reshape the relationship between platforms and the content shared on their sites, aiming to balance freedom of speech with the responsibility to mitigate harmful content.

Key Provisions of Section 230

The bill proposes revisions to Section 230, intending to hold social media platforms accountable for content that violates certain laws, such as those concerning fraud, securities violations, or hate speech. This shift in responsibility would necessitate a more active role for platforms in monitoring and moderating user-generated content.

Intended Impact on Social Media Platforms

The bill’s proposed changes to Section 230 are anticipated to have a substantial impact on social media platforms. These platforms would face increased scrutiny and responsibility for the content shared on their sites. This includes the potential for legal action against platforms if they fail to adequately moderate harmful content. This increased liability could lead to changes in platform policies, content moderation practices, and even the types of content allowed.

For example, platforms might be compelled to invest more resources in content moderation, leading to potentially more stringent content guidelines.

Comparison of Potential Effects on Stakeholders

| Stakeholder | Potential Positive Effects | Potential Negative Effects |
|---|---|---|
| Social Media Platforms | Increased clarity on their responsibilities, potential for a more focused approach to content moderation, and an opportunity to demonstrate proactive measures against harmful content. | Increased liability risks, potential for significant legal costs, pressure to invest more heavily in content moderation, and a possible decrease in user engagement due to stricter content policies. |
| Content Creators | Potential for more accountability for harmful content, potentially greater clarity on the content allowed, and a reduction in the spread of misinformation. | Potential for content restrictions, difficulty navigating complex content moderation guidelines, and the potential for their content to be removed without prior notice. |
| Users | Potential for a safer online environment, protection against harmful content, and increased access to reliable information. | Potential for restrictions on freedom of expression, increased censorship, and a possible decrease in the availability of diverse viewpoints. |
| Investors | Greater regulatory clarity for social media companies, potentially leading to a more predictable investment environment. | Potential uncertainty and regulatory risks associated with the proposed changes to Section 230, leading to decreased investment. |

Misinformation and Teenagers

Teenagers are particularly vulnerable to misinformation due to their developing cognitive abilities and tendency to rely on social media for information. Navigating the complex digital world often leads them to encounter fabricated or misleading content, impacting their understanding of crucial issues. This exploration delves into the pervasive nature of misinformation, its sources, and the potential consequences on adolescent well-being.

Prevalence of Misinformation Among Teenagers

Teenagers are heavily exposed to misinformation, often disseminated through social media platforms. This exposure can lead to confusion and the spread of false narratives. The immediacy and accessibility of social media platforms make them fertile ground for the rapid dissemination of misleading content. Studies indicate a significant portion of teenagers encounter and potentially believe false or misleading information online.

Potential Sources of Misinformation

Several factors contribute to the prevalence of misinformation among teenagers. Malicious actors, seeking to manipulate public opinion or exploit vulnerabilities, are a primary source. Intentional fabrication of information, often spread through fake accounts or bots, can be particularly effective. Misinformation can also arise from unintentional sources, such as flawed reporting or misinterpretations of complex topics. Inadequate fact-checking, coupled with the inherent difficulty in verifying online content, makes teenagers susceptible to misinformation.

Even well-meaning individuals can unintentionally share inaccurate information, amplifying its spread.

Potential Negative Impacts of Misinformation on Teenagers

Misinformation can have a wide range of detrimental effects on teenagers. It can erode trust in credible sources, leading to difficulties in discerning truth from falsehood. The spread of false information can affect decision-making, impacting choices regarding health, relationships, and even personal safety. Teenagers exposed to misinformation may experience anxiety, confusion, or emotional distress, especially if the information relates to sensitive topics.

It can also contribute to the spread of harmful stereotypes and prejudices.

Current Strategies for Combating Misinformation

Efforts to combat misinformation among teenagers often focus on media literacy education. This approach aims to equip teenagers with the skills to critically evaluate information, identify potential biases, and verify the authenticity of online content. Educational programs emphasize the importance of fact-checking and seeking diverse perspectives. Additionally, social media platforms are increasingly implementing measures to flag or remove misleading content.

This includes algorithms designed to detect and reduce the spread of misinformation.

Examples of Effective Interventions Targeting Teenagers

Various interventions show promise in combating misinformation among teenagers. Educational programs focusing on critical thinking and media literacy skills are crucial. These programs can be implemented in schools, community centers, or online. Interactive workshops and online resources can further enhance engagement and knowledge retention. Partnerships between educators, parents, and social media platforms are vital in developing and delivering effective interventions.

For example, a program that encourages teenagers to verify information from multiple sources before accepting it would significantly contribute to their ability to combat misinformation.

Table Outlining Misinformation Types and Typical Targets

| Misinformation Type | Typical Targets | Examples |
|---|---|---|
| Health Misinformation | Teenagers seeking information about health issues, wellness trends, and lifestyle choices. | False claims about vaccination efficacy, exaggerated effects of dietary supplements, and misleading information about mental health conditions. |
| Political Misinformation | Teenagers actively engaging in political discussions, considering future political involvement, and forming opinions on current events. | False information about political candidates, fabricated news stories, and misleading information about elections. |
| Social Misinformation | Teenagers involved in social media interactions, building relationships, and maintaining their social standing. | False rumors about peers, misleading celebrity endorsements, and fake news related to social issues. |

Mental Health Implications

The digital age has brought unprecedented access to information, but this accessibility also presents a significant challenge to teenagers’ mental well-being. Misinformation, often rampant on social media platforms, can have a profound and detrimental impact on their emotional and psychological development. This section examines the relationship between misinformation and mental health, exploring the ways social media use affects teenagers and the potential consequences of exposure to false or misleading content. The pervasiveness of misinformation online, coupled with the emotional vulnerabilities of teenagers, creates a perfect storm for mental health issues.

This is not simply a theoretical concern; real-life examples show the harmful effects of misleading information on adolescents. Understanding these impacts is crucial for developing effective strategies to protect teenagers’ mental health in the digital sphere.

Correlation Between Misinformation and Mental Health Issues

Teenagers are particularly susceptible to the influence of misinformation, often lacking the critical thinking skills to discern accurate information from fabricated content. This vulnerability can lead to a cascade of negative mental health outcomes, including anxiety, depression, and even self-harm. The constant barrage of often conflicting and misleading information online can contribute to feelings of stress, confusion, and isolation.

Social Media Use and Teen Mental Well-being

Social media platforms, while offering opportunities for connection and engagement, can also negatively impact teenagers’ mental well-being. The pressure to present a perfect online persona, often fueled by filtered images and curated content, can lead to feelings of inadequacy and low self-esteem. Constant exposure to potentially harmful information, including misinformation, can trigger emotional distress and exacerbate pre-existing mental health conditions.

Furthermore, the constant comparison to others’ online lives can contribute to social anxiety and depression.

Examples of Misinformation Harming Mental Health

Misinformation about body image, mental health conditions, and social issues can have a devastating impact on teenagers’ self-perception and overall well-being. For instance, false or misleading information about eating disorders can fuel unhealthy eating habits and body dissatisfaction. Similarly, misinformation about mental health conditions can stigmatize these issues and discourage teenagers from seeking help. The spread of harmful conspiracy theories, even when presented as jokes, can damage a person’s mental health and perception of reality.

Potential Mental Health Consequences for Teenagers

Exposure to misinformation can result in a range of negative mental health consequences. These consequences can range from mild distress to severe conditions requiring professional intervention. Examples include: anxiety, depression, eating disorders, sleep disturbances, and even suicidal ideation. The long-term effects of repeated exposure to misinformation are still being researched, but early indicators suggest a correlation between online misinformation exposure and increased risk for mental health issues.

Impact of Different Types of Online Content

The impact of different types of online content on mental health varies. While engaging content like educational videos can be beneficial, harmful content such as cyberbullying, misleading health information, and misinformation about social issues can significantly harm mental well-being. The potential for misinformation to spread quickly and widely on social media platforms exacerbates its negative impact on teenagers.

Potential Symptoms of Mental Health Issues Stemming from Misinformation Exposure

| Symptom Category | Potential Symptoms |
|---|---|
| Emotional | Anxiety, fear, sadness, irritability, mood swings, hopelessness |
| Behavioral | Withdrawal, changes in sleep patterns, changes in eating habits, difficulty concentrating, increased social isolation |
| Physical | Headaches, stomach aches, fatigue, muscle tension |
| Cognitive | Difficulty with decision-making, distrust of information, paranoia, distorted thinking |

The Algorithm’s Role

Algorithms are the silent architects of the online world, shaping what we see and how we interact with information. They are essentially sets of rules designed to process and filter data, and this filtering plays a crucial role in shaping the online experience, particularly for teenagers. Their influence extends far beyond simply organizing content; algorithms actively curate and prioritize what information users are exposed to, often with unintended consequences. They are powerful tools that can influence the spread of misinformation, which in turn affects the mental well-being of teenagers.

Understanding their function is crucial to navigating the digital landscape responsibly.

Algorithmic Influence on Misinformation

Algorithms are inherently prone to biases in their datasets and programming. These biases can amplify misinformation by prioritizing certain types of content over others. This is often done based on user engagement metrics like clicks, shares, and comments, which can incentivize the platform to prioritize content that is inflammatory or sensationalist, even if factually incorrect. A cycle of engagement can create a feedback loop that reinforces misleading information.
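To make the engagement feedback loop concrete, here is a minimal sketch of engagement-based ranking. Everything in it is an assumption made for illustration: the `Post` fields, the weights in `engagement_score`, and the `accurate` label (platforms do not reliably have such a label) are hypothetical and are not taken from any real platform.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    clicks: int
    shares: int
    comments: int
    accurate: bool  # hypothetical fact-check label, used only for illustration


def engagement_score(post: Post) -> float:
    # Hypothetical weights: shares and comments count more than clicks.
    return 1.0 * post.clicks + 3.0 * post.shares + 2.0 * post.comments


def rank_by_engagement(posts):
    # Highest engagement first; accuracy plays no role in the ordering.
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("Nuanced report on vaccine data", clicks=120, shares=5, comments=10, accurate=True),
    Post("SHOCKING miracle cure doctors hide", clicks=300, shares=90, comments=150, accurate=False),
    Post("School board meeting summary", clicks=40, shares=2, comments=3, accurate=True),
]

ranked = rank_by_engagement(posts)
for p in ranked:
    print(f"{engagement_score(p):7.1f}  accurate={p.accurate}  {p.title}")
```

In this toy data the sensational, inaccurate post ranks first simply because it draws the most interaction. A post shown first tends to collect even more clicks and shares, which is the self-reinforcing loop described above.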

Algorithmic Impact on Teenagers’ Content Consumption

Algorithms heavily influence what content teenagers encounter. Their personalized feeds are curated to display content deemed most relevant to their past behavior. This tailored approach, while convenient, can also create echo chambers, exposing teenagers to only viewpoints that align with their existing beliefs. The absence of diverse perspectives can hinder critical thinking skills and potentially lead to confirmation bias.
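One rough way to reason about echo chambers is to measure how varied the viewpoints in a feed are. The sketch below uses Shannon entropy over viewpoint labels as that measure. The labels "A" through "D" and both example feeds are hypothetical, and real feeds do not come with clean viewpoint labels, so treat this as an illustration of the idea only.

```python
import math
from collections import Counter


def viewpoint_entropy(feed):
    """Shannon entropy (in bits) of the viewpoint labels in a feed.

    Higher values mean the feed is spread across more viewpoints;
    0 means every item shares the same viewpoint.
    """
    counts = Counter(feed)
    total = len(feed)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Hypothetical feeds: one evenly mixed, one dominated by a single viewpoint.
balanced_feed = ["A", "B", "C", "D"] * 5
narrow_feed = ["A"] * 18 + ["B"] * 2

print(f"balanced: {viewpoint_entropy(balanced_feed):.2f} bits")
print(f"narrow:   {viewpoint_entropy(narrow_feed):.2f} bits")
```

The evenly mixed feed scores far higher than the feed dominated by one viewpoint, which is the pattern a heavily personalized feed can drift toward.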

Examples of Algorithmic Promotion and Suppression

Numerous examples illustrate how algorithms can promote or suppress specific content. News articles, for instance, might be prioritized based on engagement metrics rather than journalistic merit. A story with inflammatory language or provocative images might gain prominence over a factual, nuanced report. Conversely, certain viewpoints or perspectives might be de-prioritized, effectively suppressing their visibility. The algorithm’s bias toward trending topics could lead to the underrepresentation of slower-moving, but vital, discussions.

Bias Embedded in Algorithms

Algorithmic biases often stem from the data they are trained on. If this data reflects existing societal biases, the algorithm will likely perpetuate and even amplify those biases. For instance, if an algorithm is trained on a dataset that predominantly features content from a specific demographic, it might inadvertently favor content that aligns with that demographic’s perspectives. This bias could disproportionately affect other groups, potentially creating unequal access to information and reinforcing existing inequalities.

Strategies to Mitigate Algorithmic Impact

Several strategies can help mitigate the impact of algorithms on teenagers. Educational initiatives can equip teenagers with the critical thinking skills to evaluate online content. Transparency from social media platforms regarding their algorithmic processes could help teenagers understand how their feeds are constructed. Promoting diverse perspectives and encouraging teenagers to actively seek out different viewpoints can help break echo chambers.

Comparison of Algorithmic Approaches

| Algorithmic Approach | Description | Implications for Teenagers |
|---|---|---|
| Content-based filtering | Algorithms identify and categorize content based on keywords, themes, or other characteristics. | Can create echo chambers, potentially exposing teenagers to limited viewpoints. May miss nuanced or relevant information not directly matching the initial criteria. |
| Collaborative filtering | Algorithms recommend content based on the preferences of similar users. | Can reinforce existing preferences and limit exposure to new ideas or perspectives. May perpetuate existing biases or misunderstandings. |
| Hybrid approaches | Algorithms combine content-based and collaborative filtering techniques. | Potentially offer a balance between personalized recommendations and exposure to diverse viewpoints, but can still perpetuate biases if the underlying data is flawed. |
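The first two approaches in the table can be sketched in a few lines. This is a simplified illustration and not how any particular platform works: the item names, tags, users, and the choice of Jaccard similarity are all assumptions made for the example.

```python
def jaccard(a: set, b: set) -> float:
    """Overlap between two sets: |intersection| / |union|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Hypothetical catalogue: each item is described by a set of tags.
item_tags = {
    "fitness_tips": {"health", "exercise"},
    "diet_fad": {"health", "diet"},
    "election_explainer": {"politics", "news"},
    "study_hacks": {"school", "productivity"},
}

# Hypothetical history: which items each user has liked.
likes = {
    "teen_a": {"fitness_tips"},
    "teen_b": {"fitness_tips", "study_hacks"},
    "teen_c": {"election_explainer"},
}


def content_based(user):
    """Recommend the unseen item whose tags best match the user's liked tags."""
    seen = likes[user]
    profile = set().union(*(item_tags[i] for i in seen))
    candidates = [i for i in item_tags if i not in seen]
    return max(candidates, key=lambda i: jaccard(item_tags[i], profile))


def collaborative(user):
    """Recommend an item liked by the most similar other user."""
    others = [u for u in likes if u != user]
    neighbor = max(others, key=lambda u: jaccard(likes[u], likes[user]))
    unseen = sorted(likes[neighbor] - likes[user])
    return unseen[0] if unseen else None


print(content_based("teen_a"))
print(collaborative("teen_a"))
```

For `teen_a`, the content-based recommender picks another health-tagged item because it resembles what was already liked, while the collaborative recommender borrows from the most similar user. Neither step checks whether the recommended item is accurate, which is why both can reinforce existing preferences.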

Section 230 and Mental Health

Section 230 of the Communications Decency Act has been a cornerstone of online platforms’ operation for years, granting them significant immunity from liability for user-generated content. However, as the internet’s role in shaping our mental health becomes more pronounced, concerns are rising about how Section 230 affects the responsibility of platforms and their ability to mitigate harm. This section examines the relationship between Section 230, platform responsibility, and the potential impact on mental health. The legal framework established by Section 230, while intended to foster the free exchange of ideas online, has led to a significant debate regarding the extent of platform responsibility.

This immunity has allowed platforms to operate with a degree of autonomy, particularly concerning content moderation. However, the increasing evidence linking online content to mental health issues, particularly among teenagers, is prompting calls for greater accountability. This section analyzes the implications of Section 230 changes on the platforms’ capacity to address these concerns.

Relationship of Section 230 to Platform Responsibility

Section 230 shields online platforms from liability for content posted by users. This means platforms are generally not held responsible for the harmful or misleading information shared on their sites, unless they actively participate in creating or distributing that content. The law’s intent was to foster the free flow of information, encouraging the development of online communities and services.

However, critics argue that this immunity has incentivized platforms to prioritize user growth and engagement over the well-being of their users.

Potential Impact of Section 230 Changes on Mental Health

Changes to Section 230 could significantly affect mental health outcomes. For instance, holding platforms more accountable for harmful content could incentivize them to invest more in robust content moderation and safety measures. Conversely, overly stringent regulations could stifle online discourse and limit access to vital information. The balance between freedom of expression and the need to protect vulnerable users remains a critical challenge.

Role of Platforms in Moderating Content

Platforms play a crucial role in moderating content, acting as gatekeepers in the digital world. This responsibility extends beyond simply hosting user-generated content; it includes actively identifying and addressing potentially harmful content. This responsibility is not universally accepted or implemented.

Examples of How Platforms Currently Handle Harmful Content

Current content moderation strategies vary widely across platforms. Some platforms employ automated systems to identify potentially harmful content, while others rely on human moderators. Examples include:

- Automated Content Filtering: Algorithms are employed to detect hate speech, misinformation, and other problematic content. The effectiveness of these algorithms can vary depending on the sophistication of the algorithm and the types of content being targeted.

- Human Moderation: Human moderators review content flagged by automated systems or reported by users. This process can be time-consuming and resource-intensive. Consistency and effectiveness in human moderation are crucial.

- Community Guidelines: Platforms establish community guidelines to inform users about acceptable content and behavior. These guidelines can vary significantly, impacting how different platforms address harmful content.
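The combination of automated filtering and human review described above can be sketched as a simple triage step. The term list, the scoring rule, and both thresholds below are hypothetical and deliberately crude; production systems use trained classifiers rather than word lists.

```python
def automated_score(text: str) -> float:
    """Toy classifier: fraction of words that appear in a hypothetical term list."""
    flagged_terms = {"miracle", "cure", "hoax"}
    words = [w.strip(".,!?").lower() for w in text.split()]
    if not words:
        return 0.0
    return sum(w in flagged_terms for w in words) / len(words)


def triage(text: str, remove_at: float = 0.5, review_at: float = 0.2) -> str:
    """Route content by score: remove, send to a human moderator, or allow."""
    score = automated_score(text)
    if score >= remove_at:
        return "remove"
    if score >= review_at:
        return "human_review"
    return "allow"


for sample in ["Miracle cure hoax!", "Is this cure real?", "Homework is due Friday"]:
    print(f"{triage(sample):13s} {sample}")
```

The middle band is the design choice worth noticing: content the automated step is unsure about goes to a person instead of being removed or allowed outright, which trades moderation cost for fewer wrongful removals.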

Strategies for Platforms to Improve Content Moderation

Improving content moderation requires a multifaceted approach.

- Enhanced Algorithms: More sophisticated algorithms are needed to identify and flag harmful content more accurately and efficiently. This includes incorporating factors like context and intent.

- Improved Training and Resources for Moderators: Providing human moderators with better training and resources can improve the quality and consistency of their decisions. This includes training in mental health awareness and cultural sensitivity.

- Transparency and Accountability: Platforms should be transparent about their content moderation policies and procedures. This transparency fosters accountability and trust.

Current Responsibilities of Platforms Under Section 230

| Platform Responsibility | Description |
|---|---|
| Content Hosting | Platforms are responsible for hosting user-generated content. |
| Content Moderation (Limited) | Platforms have a limited responsibility to moderate content that is illegal or violates their terms of service. |
| User Account Management | Platforms are responsible for managing user accounts and enforcing their terms of service. |
| Liability (Generally Limited) | Section 230 generally protects platforms from liability for user-generated content. |

Impact on Teenagers’ Online Behavior

Navigating the digital world is increasingly complex, and teenagers in particular are profoundly shaped by the online spaces they inhabit. The proposed Klobuchar-Lummis bill, with its potential implications for social media platforms, will likely influence how teenagers engage with these platforms. Understanding the potential shifts in online behavior is crucial for preparing both teenagers and parents for the changes ahead. This analysis looks at how the bill might alter teenagers’ online behavior, examining potential adaptations and the differing responses across demographic groups.

We will also explore strategies to support teenagers during this transition. The goal is to equip readers with a better understanding of the challenges and opportunities presented by the legislation’s impact on teenage online interactions.

Potential Shifts in Social Media Engagement

The proposed changes to Section 230, especially regarding content moderation and platform accountability, are likely to reshape how teenagers interact with social media. This is not simply about censorship; it’s about the responsibility platforms take for the content they host. Teenagers are not immune to this shift in the online landscape.

- Increased Scrutiny of Content: Teenagers may become more cautious about the content they share, recognizing that platforms will have a heightened level of oversight. This could lead to a more filtered, and potentially less authentic, online presence. They might be more aware of the potential repercussions of sharing opinions or experiences.

- Shift in Content Creation: With increased accountability, teenagers might alter their content creation strategies. They could become more mindful of the potential consequences of posting sensitive or controversial information, potentially leading to a decrease in the sharing of emotionally charged or vulnerable content. Conversely, they might focus on creating content that is less likely to attract negative attention.

- Alternative Platforms and Communities: Teenagers, known for their adaptability, might seek out alternative platforms or create their own communities to share content and interact with peers. This could lead to a fragmentation of online spaces and a search for more niche communities, especially if they feel their preferred platforms have become too restrictive.

Demographic Variations in Responses

Teenagers’ responses to the proposed changes will likely vary based on factors such as socioeconomic status, access to technology, and cultural background.

- Socioeconomic Factors: Teenagers from lower socioeconomic backgrounds might be disproportionately affected by the legislation’s impact on platform access and functionality. If certain platforms become less accessible or require financial commitments, this could exacerbate existing inequalities.

- Cultural Background: Teenagers with diverse cultural backgrounds might have different expectations and experiences with online interactions. Changes in platform policies might impact the way they communicate and interact with their peers and family, particularly if the policies conflict with cultural norms.

- Access to Technology: Teenagers with limited access to technology or reliable internet connections may face greater challenges in adapting to the new regulations. This could lead to further digital divides and limit their ability to engage in online communities.

Supporting Teenagers Through the Changes

Strategies to support teenagers during this transition include fostering open communication about online safety and platform usage, providing access to resources that help them navigate the new regulations, and promoting digital literacy skills.

- Open Communication: Parents and educators should initiate open dialogues with teenagers about the implications of the legislation, encouraging them to ask questions and express concerns.

- Educational Resources: Schools and community organizations can provide educational resources that help teenagers understand their rights and responsibilities online, and help them navigate the potential challenges of the new regulations.

- Digital Literacy Programs: Investing in digital literacy programs can help teenagers adapt to changing platforms and develop critical thinking skills for evaluating online information. This is particularly important for helping them differentiate credible sources from misinformation.

Potential Shifts in Online Behavior

| Aspect of Online Behavior | Potential Shift (with Legislation) |
|---|---|
| Content Sharing | More cautious, filtered, less authentic |
| Content Creation | More mindful of consequences, potentially less emotionally charged |
| Platform Choice | Seek alternative platforms, create niche communities |
| Impact on Demographics | Potentially exacerbate existing inequalities for low-income teenagers |

Illustrative Case Studies

Misinformation, particularly when targeted at vulnerable populations like teenagers, can have devastating consequences. Understanding how these issues manifest and how legislation like the Klobuchar-Lummis bill might mitigate them is crucial. This section presents a hypothetical case study to illustrate these points.

Hypothetical Case Study: The “Perfect Body” Illusion

A 15-year-old girl, Sarah, becomes increasingly obsessed with social media images portraying unrealistic beauty standards. She starts consuming content promoting extreme dieting and exercise routines, fueled by misinformation spread through influencer posts and online forums. This misinformation, portraying unrealistic body image ideals and dangerous methods, leads to a decline in her mental well-being. She experiences anxiety, depression, and disordered eating behaviors.

Her self-esteem plummets, and she isolates herself from friends and family.

“The proliferation of misinformation online, particularly regarding body image and health, poses a serious threat to the mental well-being of teenagers.”

“The Klobuchar-Lummis bill aims to hold social media platforms accountable for the spread of harmful misinformation and disinformation.”

Potential Impact of the Klobuchar-Lummis Bill

The Klobuchar-Lummis bill, by mandating greater transparency and accountability for social media companies, could potentially address Sarah’s situation. By requiring platforms to identify and flag potentially harmful content, it could curb the spread of misinformation promoting unrealistic body image ideals. The bill also encourages platforms to implement content moderation policies, possibly including measures for age-appropriate content, and to provide resources for teenagers struggling with these issues.

This could involve collaborations with mental health professionals or educational organizations.

Potential Positive Outcomes

Reduced exposure to harmful misinformation, like the unrealistic body image standards, could lead to a significant improvement in Sarah’s mental well-being. Increased transparency and moderation could create a safer online environment, enabling her to engage in more positive and supportive interactions. Access to resources through partnerships with mental health organizations could provide crucial support for teenagers like Sarah, empowering them to challenge misinformation and develop healthier coping mechanisms.

| Aspect | Case Study (Sarah) | Potential Impact of Klobuchar-Lummis Bill |
|---|---|---|
| Misinformation | Exposure to misinformation about body image leads to negative mental health effects. | The bill could reduce exposure to such misinformation by requiring platforms to flag or remove harmful content. |
| Platform Responsibility | Social media platforms are not held accountable for the spread of harmful content. | The bill holds platforms accountable, requiring them to implement policies to address the spread of misinformation. |
| Teenager’s Mental Health | Sarah experiences anxiety, depression, and disordered eating. | Reduced exposure to misinformation and access to support resources could improve her mental health. |

Ending Remarks

In conclusion, the Klobuchar-Lummis bill presents an opportunity to address the interplay between online content, algorithms, and teenage mental health. While the bill seeks to address misinformation, its effects on various stakeholders are multifaceted and warrant careful consideration. The potential consequences for teenagers, from altered online behavior to mental health implications, call for an approach that protects young people without undermining the useful role online platforms play.