This article examines the gender biases inherent in machine translation systems, focusing on how Google Translate handles gender-specific translations across languages. It explores how these biases manifest in various languages, leading to incorrect or inappropriate gendered translations. We’ll analyze Google’s approach to handling gendered language, examining the impact of training data and potential solutions to mitigate these issues.

The potential societal impact of such bias on different communities and cultures is also highlighted.

The analysis will include detailed examples from different languages, demonstrating how gendered nouns, pronouns, and adjectives are handled. We’ll also investigate the historical context of this problem, tracing its roots back to biases in the language data and algorithms used to train these systems. This analysis will help us understand the complex ways in which societal biases can be reflected in seemingly neutral translation tools.

Introduction to Gender Bias in Machine Translation

Machine translation systems, like Google Translate, aim to bridge language barriers. However, these systems often reflect and perpetuate societal biases present in the data they are trained on. This gender bias manifests in various ways, leading to inappropriate or inaccurate translations, particularly when dealing with gendered nouns, pronouns, and adjectives. The consequences extend beyond simple linguistic inaccuracies, affecting how different communities and cultures are represented and understood. The roots of this problem lie in the historical representation of gender in language corpora.

Algorithms trained on biased data naturally learn and replicate these biases, potentially exacerbating existing societal inequalities. Understanding these biases is crucial for improving machine translation systems and promoting a more equitable and inclusive digital landscape.

Manifestations of Gender Bias in Google Translate

Gender bias in machine translation isn’t a theoretical concern; it’s a real-world issue that impacts numerous languages. The bias manifests in various ways, often substituting one gendered term for another, or using gendered terms inappropriately.

Examples of Gender Bias Across Languages

The following table collects examples of how Google Translate handles gender across several language pairs. In these examples, the system defaults to masculine forms when the source does not mark gender, and follows the source when gender is marked explicitly.

| Language Pair | Original Text (Intended Gender) | Google Translate Output | Explanation of Bias |
|---|---|---|---|
| English to Spanish (female doctor) | “Dr. Smith is a brilliant doctor.” (Female) | “El Dr. Smith es un brillante médico.” | The English sentence does not mark gender, so the system falls back on the masculine article “el” and noun “médico.” For a female doctor, the correct rendering would use “la Dra.” and “médica.” |
| French to German (female student) | “Elle est une étudiante brillante.” (Female) | “Sie ist eine brillante Studentin.” | The feminine gender is carried over correctly. German, like French, grammatically requires a gendered form of the role (“Studentin”), so this is a structural feature of the languages rather than a translation error. |
| German to Japanese (engineer) | “Der Ingenieur ist sehr gut.” (Masculine noun, often used generically) | Varies; Japanese nouns such as エンジニア (enjinia) carry no grammatical gender. | German’s masculine “Ingenieur” also serves as the generic form, so the source may not refer to a man at all. Bias would appear only if the output added a gendered pronoun such as 彼 (kare, “he”) that the source does not justify. |
| Hindi to English (female teacher) | “वह एक अच्छी शिक्षिका है” (Female) | “She is a good teacher.” | The pronoun वह (vah) is gender-neutral, but the feminine noun शिक्षिका and adjective अच्छी mark the referent as female, so “She” is correct. Bias becomes a risk when a Hindi sentence lacks such markers and the system must choose between “he” and “she” based on training-data statistics. |

Societal Impact of Gender Bias in Machine Translation

The implications of gender bias in machine translation systems extend beyond mere linguistic inaccuracies. They can reinforce harmful stereotypes, limit opportunities for women and other marginalized groups, and contribute to a skewed perception of societal roles in different cultures. By perpetuating gender bias, these systems can inadvertently contribute to a less equitable and inclusive digital environment.

Historical Context of Gender Bias in Machine Translation

The historical representation of gender in language data is a critical factor in the development of gender bias in machine translation systems. Language corpora often reflect the societal norms and expectations of the past, where women were often underrepresented or stereotyped. These historical biases are then encoded into the algorithms, perpetuating and amplifying them in the output.

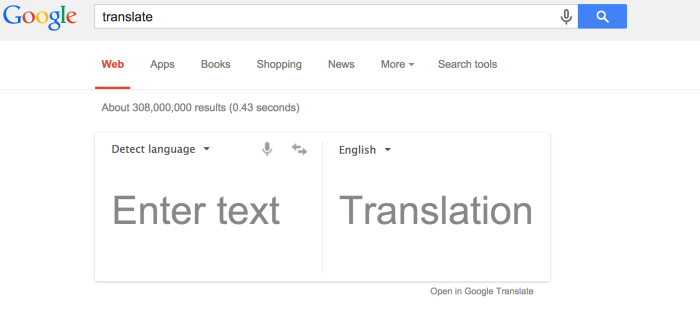

Analyzing Google Translate’s Approach to Gendered Language

Google Translate, a powerful tool for communicating across languages, often struggles to reflect the nuances of gendered language accurately. While it strives to provide precise translations, biases in the vast datasets it learns from can lead to problematic outcomes. This analysis examines how Google Translate handles gendered nouns, pronouns, and adjectives, compares its performance across language pairs, and explores potential reasons behind the observed biases. Google Translate’s translation engine learns from massive datasets of text, including existing translations.

These datasets, while encompassing a wide range of language and context, may not always perfectly represent the subtleties of gendered language use. This can result in translations that reflect societal biases, even unintentionally perpetuating harmful stereotypes.

Different Handling of Gendered Nouns, Pronouns, and Adjectives

Google Translate’s approach to gendered language varies significantly across languages. Some languages have grammatical gender: every noun carries a gender, and the articles, adjectives, and sometimes pronouns that accompany it change form to agree with it. When translating into languages like Spanish, French, or German, Google Translate must therefore choose a gender for each noun, and that choice can be wrong when the source does not specify one. For example, translating “the doctor” into Spanish forces a choice between “el médico” and “la médica”; the system may pick the masculine form even when the surrounding context indicates a female doctor.

Conversely, English marks gender only in pronouns and a limited set of nouns, so translating from a language with grammatical gender into English often loses the gender information carried by articles and adjectives. The result is a neutral or generic translation that drops the specific gender expressed in the source.

Comparison of Google Translate’s Performance Across Language Pairs

The effectiveness of Google Translate in handling gendered language varies considerably between different language pairs. For example, translating from a language with strong grammatical gender to one without may lead to a loss of the original gender information. Conversely, translating between languages with similar gendered structures may yield more accurate results.

Potential Reasons for Observed Patterns of Bias

Several factors can contribute to the observed patterns of bias in Google Translate’s output. The training data used to train the translation models may contain skewed representations of gender roles. For instance, if a dataset predominantly portrays men in leadership positions, the model may inadvertently reflect this bias in its translations. Additionally, the algorithms used by Google Translate may not adequately account for the context and nuances of gendered language, leading to inappropriate or inaccurate translations.

Examples of Google Translate’s Handling of Gender in Different Languages

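| Language Pair | Source Text | Google Translate Output | Analysis/Comment |
|---|---|---|---|
| Spanish (masculine) to English | El doctor es inteligente. | The doctor is intelligent. | English “doctor” is gender-neutral, so the masculine marking of “el doctor” is dropped. This is acceptable, because the Spanish masculine is also used generically, but any gender information in the source is lost. |
| French (masculine) to English | Le professeur est compétent. | The teacher is competent. | As with Spanish, the masculine article disappears in English. Traditional French uses “le professeur” for teachers of any gender, so the neutral English output is appropriate. |
| German (masculine) to English | Der Ingenieur ist kreativ. | The engineer is creative. | The masculine form is not preserved, because English “engineer” carries no gender; the output is neutral. |
| English to Spanish (feminine output) | The nurse is caring. | La enfermera es cariñosa. | English “nurse” is gender-neutral, yet the output uses the feminine “la enfermera” rather than “el enfermero.” This default reflects the stereotype that nurses are women, the mirror image of the masculine default for professions such as “doctor.” |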

Exploring the Impact of Language Data on Bias

The accuracy and fairness of machine translation systems like Google Translate are deeply intertwined with the data they are trained on. The large volumes of text used for training inevitably contain biases that reflect societal norms and stereotypes. Understanding how these biases are encoded in the data is crucial for developing more equitable and inclusive translation tools. This section examines the role of training data in shaping the biases present in machine translation. The training data acts as a mirror, reflecting the biases present in the way language is used.

If a dataset predominantly portrays certain genders in specific roles or attributes, the model will learn and replicate those stereotypes in its translations. This is not a conscious act of bias on the part of the developers, but a consequence of the inherent limitations of the training data.

The Role of Training Data in Shaping Bias

Training data for machine translation models comprises vast corpora of text, including books, articles, websites, and social media posts. These datasets represent a snapshot of language use at a specific point in time, potentially reflecting historical and contemporary societal biases. The underrepresentation of certain genders or gendered terms in training datasets directly impacts the quality and fairness of translations.

For example, if a dataset disproportionately depicts women in roles like homemaker or secretary and men in professional or leadership roles, the model may be more likely to choose feminine forms for the former and masculine forms for the latter, regardless of who is actually being described.


How Underrepresentation Leads to Skewed Translations

The underrepresentation of certain genders or gendered terms in training datasets can lead to skewed translations. When a model encounters terms or descriptions associated with specific genders, it may fail to reflect nuanced meanings or may perpetuate stereotypes. For instance, the model might consistently render “doctor” with a masculine form, even when the source text describes a female doctor. This reflects the skewed gender representation within the training data.
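To see why skewed data produces skewed output, consider a deliberately simplified model that, for a gender-ambiguous English noun, always picks whichever Spanish form appeared most often in its training pairs. This is a toy frequency-based chooser, not how Google Translate works internally, and the counts are invented purely for illustration.

```python
from collections import Counter

# Hypothetical toy corpus of (English noun, Spanish form) alignments.
# The counts are invented to illustrate a skew; they are not real statistics.
TRAINING_PAIRS = (
    [("doctor", "el médico")] * 80
    + [("doctor", "la médica")] * 20
    + [("nurse", "la enfermera")] * 90
    + [("nurse", "el enfermero")] * 10
)


def build_counts(pairs):
    """Count how often each Spanish form was aligned with each English noun."""
    counts = {}
    for source, target in pairs:
        counts.setdefault(source, Counter())[target] += 1
    return counts


def most_likely_form(counts, noun):
    """Return the single most frequent form, as a naive 'best guess' model would."""
    return counts[noun].most_common(1)[0][0]


counts = build_counts(TRAINING_PAIRS)
print(most_likely_form(counts, "doctor"))  # el médico
print(most_likely_form(counts, "nurse"))   # la enfermera
```

Even though 20% of the toy “doctor” examples are feminine, a model that always returns its single best guess outputs the masculine form every time, amplifying the imbalance rather than merely reflecting it.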

Examples of Biased Language Data

Biased language data can perpetuate existing societal stereotypes in translated text. If a large portion of the training data uses masculine pronouns to refer to people in general, the translation output might do the same, producing exclusionary or inaccurate representations. For example, if a training set frequently pairs “engineer” with male names and pronouns, the model may render a description of a female engineer with masculine forms or pronouns.

Types of Language Data Contributing to Bias

Numerous types of language data contribute to the bias problem in machine translation.


- News articles: News articles frequently reflect societal biases, potentially overemphasizing the roles and characteristics of certain genders. For example, if a dataset predominantly features male politicians in news articles, the model might consistently associate political leadership with males.

- Social media posts: Social media data contains a high proportion of colloquialisms and informal gendered language, which can encode biases in the translation process. For example, if posts routinely use gendered slang in particular ways, the model may learn and replicate those associations in its translations.

- Books and literature: Literature often reflects societal norms and stereotypes. If a dataset is heavily weighted toward literature with traditional gender roles, the model might learn to associate specific genders with particular attributes.

- Web pages: Web pages, particularly those focusing on specific topics or communities, may contain biased language patterns that reflect the views or interests of that group. For example, a dataset containing numerous web pages focused on a particular profession might contain a skewed gender representation.

Potential Sources of Biased Data

- Historical data: Older texts may reflect outdated societal views and gender roles.

- Uneven representation across demographics: The absence of certain gendered terms or individuals from specific demographic groups.

- Unbalanced data collection methods: Methods used to collect data might disproportionately sample texts from certain sources or regions.

- Inadequate representation of non-binary genders: A lack of representation of non-binary genders in the training data.

Methods for Addressing Gender Bias in Translation

Addressing gender bias in machine translation systems is crucial for producing fair and inclusive translations. Existing translation models, often trained on vast datasets reflecting societal biases, can perpetuate stereotypes and harmful generalizations. This necessitates proactive methods to mitigate these biases and promote gender neutrality in the translated output.

Existing Bias Mitigation Techniques

Numerous techniques aim to reduce gender bias in machine translation. These include modifying the training data to lessen the prominence of biased patterns and introducing constraints to limit the generation of stereotypical translations. Techniques like bias detection and mitigation are vital steps in this process. By identifying and addressing biased language patterns, we can foster more equitable and respectful translations.
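One widely studied way to modify training data is counterfactual data augmentation: for each training sentence, add a copy with gendered words swapped, so that roles appear with both genders. The sketch below applies the idea to English text only, using a small, hypothetical swap list; real systems need far larger lexicons and must apply matching swaps to both sides of parallel data.

```python
import re

# Hypothetical, deliberately small swap list. Object and possessive pronouns
# (him, his, her) are left out because "her" maps to "him" or "his" depending
# on grammar, which a word list cannot decide.
SWAPS = {
    "he": "she", "she": "he",
    "man": "woman", "woman": "man",
    "men": "women", "women": "men",
    "father": "mother", "mother": "father",
}

WORD_RE = re.compile(r"\b[A-Za-z]+\b")


def swap_gender(sentence):
    """Replace each listed gendered word with its counterpart, keeping capitalization."""
    def replace(match):
        word = match.group(0)
        swapped = SWAPS.get(word.lower())
        if swapped is None:
            return word
        return swapped.capitalize() if word[0].isupper() else swapped
    return WORD_RE.sub(replace, sentence)


def augment(corpus):
    """Keep every sentence and add its gender-swapped copy when one exists."""
    augmented = []
    for sentence in corpus:
        augmented.append(sentence)
        swapped = swap_gender(sentence)
        if swapped != sentence:
            augmented.append(swapped)
    return augmented


corpus = ["He is an engineer.", "She is a nurse.", "The bridge is long."]
print(augment(corpus))
# ['He is an engineer.', 'She is an engineer.', 'She is a nurse.', 'He is a nurse.', 'The bridge is long.']
```

Applied to parallel data, the swap would also have to change the target side consistently (for example, Spanish “el ingeniero” to “la ingeniera”), which this English-only sketch does not attempt.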

Bias Detection and Mitigation in Google Translate

Google Translate, as a prominent machine translation platform, is actively researching and implementing bias detection and mitigation strategies. This involves analyzing the frequency of gendered language in the training data and identifying instances where translations reinforce stereotypes. Specific details about Google Translate’s internal processes remain undisclosed, but some efforts are publicly visible: since 2018, for example, Google Translate has shown both feminine and masculine translations for some gender-neutral source text, including some Turkish-to-English phrases.

A crucial aspect of this involves refining the algorithms to favor gender-neutral alternatives when possible.

Alternative Approaches to Address Gender Bias

Beyond the existing techniques, new approaches are emerging to tackle gender bias in translation. These approaches include developing specialized datasets that explicitly promote gender neutrality and using adversarial training methods to challenge and counter biased language patterns. Introducing gender-neutral terms and rephrasing sentences to avoid gendered pronouns are also effective methods. Furthermore, incorporating human feedback loops in the training process can help refine the models to better understand and respond to nuanced gendered language.

Potential Benefits of Gender-Neutral Alternatives

Incorporating gender-neutral alternatives in translation outputs can lead to more inclusive and respectful communication. By avoiding the use of gendered language where unnecessary, we can foster a more equitable and unbiased environment for all users. For example, using “they/them” as a gender-neutral pronoun instead of “he” or “she” can significantly improve inclusivity in translated text. This approach leads to a more representative and culturally sensitive output, avoiding unintended offense and promoting respect.

Table of Bias Mitigation Techniques

| Technique | Description | Potential Applications | Examples |
|---|---|---|---|
| Bias Detection | Identifying patterns of biased language in training data or model output. | Flagging phrases and terms that reinforce gender stereotypes. | Flagging terms like “mankind” or sentences where gendered pronouns are used inappropriately. |
| Bias Mitigation | Reducing the frequency of biased language and promoting gender-neutral alternatives. | Adjusting the model’s output to favor gender-neutral terms and avoid unnecessary gendered language. | Replacing “he” with “they” in contexts where the gender is not known. |
| Specialized Datasets | Creating datasets that promote gender neutrality and balanced gender representation. | Training or fine-tuning models on text crafted to avoid gender bias. | Using datasets that contain gender-neutral pronouns and avoid biased language. |
| Adversarial Training | Training the model alongside an adversary that tries to predict gender from the model’s internal representations, and penalizing the model when the adversary succeeds. | Discouraging the model from encoding gender where the source does not specify it, so its output relies less on stereotypical associations. | An adversary tries to guess the engineer’s gender from the encoding of “The engineer designed the bridge”; the translation model is updated until that guess becomes unreliable. |
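The “Bias Detection” row can be illustrated with a simple lexicon-based scan that flags gendered generic terms such as “mankind” and suggests neutral alternatives. The term list and suggestions below are hypothetical examples; lexicon matching cannot judge context, so its output is a list of candidates for human review rather than a verdict.

```python
import re

# Hypothetical lexicon of gendered generic terms and possible neutral alternatives.
FLAGGED_TERMS = {
    "mankind": "humankind",
    "chairman": "chairperson",
    "manpower": "workforce",
    "policeman": "police officer",
    "fireman": "firefighter",
}

# Whole-word, case-insensitive match on any flagged term.
TERM_RE = re.compile(r"\b(" + "|".join(FLAGGED_TERMS) + r")\b", re.IGNORECASE)


def detect_gendered_terms(text):
    """Return (character offset, matched term, suggested alternative) for each hit."""
    findings = []
    for match in TERM_RE.finditer(text):
        term = match.group(1)
        findings.append((match.start(), term, FLAGGED_TERMS[term.lower()]))
    return findings


sample = "The chairman said mankind needs more manpower."
for position, term, suggestion in detect_gendered_terms(sample):
    print(f"{position}: '{term}' -> consider '{suggestion}'")
```

A scan like this is cheap enough to run over an entire training corpus, which makes it a reasonable first filter before more expensive, context-aware review.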

Case Studies and Examples

Google Translate, while a powerful tool, isn’t immune to the biases embedded in the vast datasets it learns from. These biases, often reflecting societal norms, can manifest in gendered language translations. Understanding these instances is crucial to identifying and mitigating the harmful effects of such bias. This section delves into specific case studies, highlighting problematic translations and exploring methods for developing more equitable alternatives.

Examples of Gender Bias in Different Language Pairs

Analyzing Google Translate’s performance across various language pairs reveals recurring patterns of gender bias. This bias isn’t uniform across all languages; it’s influenced by the specific societal norms and linguistic structures of each language. The examples below show how the tool can inadvertently reinforce harmful stereotypes, and where a gender-inclusive alternative is or is not needed.

| Language Pair | Original Text | Google Translate Output | Gender-Neutral or Gender-Inclusive Alternative |
|---|---|---|---|
| English to Spanish | “The doctor performed the surgery.” | “El doctor realizó la cirugía.” | Offer both forms: “El médico realizó la cirugía.” / “La médica realizó la cirugía.” |
| English to French | “A scientist made a groundbreaking discovery.” | “Un scientifique a fait une découverte révolutionnaire.” | Offer both forms: “Un scientifique…” / “Une scientifique…” (the noun “scientifique” is the same for both genders; only the article changes), or rephrase as “Une personne scientifique a fait une découverte révolutionnaire.” |
| English to Hindi | “The engineer designed the bridge.” | “इंजीनियर ने पुल का डिजाइन किया।” (The engineer designed the bridge.) | No change needed: in this “ने” construction the verb agrees with the object डिजाइन (design), not with the engineer, so the sentence is already gender-neutral. |
| French to English | “La femme a remporté le prix.” | “The woman won the prize.” | No change needed: the source explicitly refers to a woman, so the output is accurate; neutralizing it would drop information. |

Instances of Perpetuated Gender Stereotypes

Google Translate sometimes perpetuates gender stereotypes by consistently associating certain professions or roles with specific genders. This can reinforce existing societal biases, limiting opportunities for individuals based on perceived gender roles.


- In many translations, the default assumption is that a person in a professional position is male. The tool often defaults to masculine nouns and pronouns even when the original text is gender-neutral. For example, Turkish uses the gender-neutral pronoun “o,” yet “o bir doktor” was widely reported to be rendered in English as “he is a doctor,” while “o bir hemşire” became “she is a nurse.”

- Jobs traditionally associated with men, such as “engineer” or “programmer,” are frequently translated using masculine forms, while jobs traditionally associated with women, such as “nurse,” tend to receive feminine forms: the mirror image of the same bias.

Methods for Evaluating Bias Mitigation Strategies

Evaluating the effectiveness of bias mitigation strategies requires a multifaceted approach. Qualitative assessment alone is insufficient; it should be paired with quantitative measures of how changes affect the translations.

- Quantitative Analysis: Statistical analysis of translated text can help identify patterns of gender bias reduction. Comparing the frequency of masculine and feminine terms in translated outputs before and after bias mitigation can provide objective data.

- Qualitative Evaluation: Expert review of translated texts can assess the nuanced impact of the changes. Human evaluators can judge the accuracy, naturalness, and neutrality of the translations, providing a subjective but valuable perspective.

- User Feedback: Collecting feedback from diverse users across different language pairs can identify areas where the mitigation strategies are still falling short. This feedback can be collected through surveys, questionnaires, or user testing, providing crucial real-world insights.
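The quantitative analysis described above can start as simply as counting masculine and feminine forms in translation outputs before and after a mitigation step. The sketch below assumes Spanish output and a hypothetical list of occupation stems, and uses the article in front of each occupation noun (“el” or “la”) as the gender signal; the sample outputs are invented for illustration.

```python
import re
from collections import Counter

# Hypothetical occupation stems; the article in front of them ("el" or "la")
# marks the grammatical gender chosen for the person.
OCCUPATION_RE = re.compile(
    r"\b(el|la)\s+(médic|ingenier|enfermer|abogad)[oa]\b", re.IGNORECASE
)


def gender_counts(translations):
    """Count masculine and feminine articles in front of occupation nouns."""
    counts = Counter()
    for sentence in translations:
        for article, _stem in OCCUPATION_RE.findall(sentence):
            counts["masculine" if article.lower() == "el" else "feminine"] += 1
    return counts


def feminine_share(counts):
    """Fraction of occupation mentions rendered in the feminine (0.0 if none)."""
    total = counts["masculine"] + counts["feminine"]
    return counts["feminine"] / total if total else 0.0


# Invented outputs for the same four gender-ambiguous English sources.
before = [
    "El médico realizó la cirugía.",
    "El ingeniero diseñó el puente.",
    "La enfermera atendió al paciente.",
    "El abogado ganó el caso.",
]
after = [
    "La médica realizó la cirugía.",
    "El ingeniero diseñó el puente.",
    "El enfermero atendió al paciente.",
    "La abogada ganó el caso.",
]
print(f"feminine share before: {feminine_share(gender_counts(before)):.2f}")  # 0.25
print(f"feminine share after:  {feminine_share(gender_counts(after)):.2f}")   # 0.50
```

For gender-ambiguous test sentences, a share far from 0.5 suggests the system is applying a default; for sentences whose intended gender is known, accuracy against that known gender is the more meaningful measure.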

Future Directions and Research

The pervasive issue of gender bias in machine translation systems necessitates a proactive and forward-thinking approach to ensure equitable and inclusive language processing. Current models, while showing progress, often perpetuate harmful stereotypes reflected in the training data. Future research must move beyond simply identifying bias to actively mitigating it, creating systems that accurately represent the diverse spectrum of human language.

Potential Research Directions

Addressing gender bias in machine translation demands a multifaceted approach. Future research should explore novel methods for detecting and correcting gendered language in training data. This includes the development of sophisticated algorithms that can identify and flag biased phrases, pronouns, and word choices. Critically, the algorithms must go beyond simple token replacement to understand the nuanced context of the bias.

Advancements in Gender-Aware Translation Technologies

Significant advancements in gender-aware translation technologies will require incorporating gender-neutral alternatives into the translation process. This could involve expanding the vocabulary to include more gender-neutral terms and phrases, and adjusting the algorithms to favor these neutral alternatives. For example, an English output could use “they” instead of “he” or “she” when the source does not specify a gender.

Solutions for Greater Inclusivity and Fairness

Ensuring greater inclusivity and fairness in machine translation systems requires the development of algorithms capable of analyzing and adjusting for the context of a sentence, paragraph, or even a longer text. This goes beyond simply replacing specific words. The solution lies in building a deeper understanding of the societal implications of language choices. For example, an algorithm trained on diverse texts would better understand the context in which a specific word or phrase is used and avoid misinterpreting it.

Illustration of a Gender-Neutral Translation System

Imagine a sentence like “The engineer designed the bridge.” A gender-neutral translation system would recognize that “engineer” does not specify a gender. Instead of automatically translating it into a gendered term in the target language, it would analyze the context. If nothing in the text indicates the engineer’s gender, the system would produce a translation that does not imply a particular gender, or offer alternatives for each gender. The process can be laid out step by step, as in a flowchart:

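| Step | Action |
|---|---|
| 1 | Input sentence: “The engineer designed the bridge.” |
| 2 | Analyze the sentence and its context for gendered terms and gender cues. |
| 3 | Identify potential gender bias, such as a point where the target language forces a gender choice the source does not support. |
| 4 | Select gender-neutral alternatives, or prepare both gendered forms. |
| 5 | Translate the sentence with gender-neutral terms, if appropriate. |
| 6 | Output the translated sentence. A target without grammatical gender (such as Japanese) needs no change; a target that requires gender (such as Spanish) gets either both forms (“El ingeniero diseñó el puente.” / “La ingeniera diseñó el puente.”) or a gender-neutral rephrasing. |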

These steps illustrate the core components of a gender-neutral translation system.
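The six steps above can be sketched in code for a single language pair. This is a minimal illustration, not a translation engine: it assumes English-to-Spanish, a tiny hypothetical lexicon, one hard-coded predicate, and crude keyword cues (“she,” “he,” “Mr.,” “Ms.”) in the surrounding context. When no clear cue is found, it returns both gendered translations instead of silently picking one.

```python
import re

# Hypothetical English -> Spanish lexicon: noun -> (masculine form, feminine form).
OCCUPATIONS = {
    "engineer": ("el ingeniero", "la ingeniera"),
    "doctor": ("el médico", "la médica"),
    "nurse": ("el enfermero", "la enfermera"),
}
# Hypothetical fixed translation for the rest of the example sentence.
PREDICATES = {"designed the bridge.": "diseñó el puente."}

# Crude, hypothetical gender cues looked for in the surrounding context.
FEMININE_CUES = {"she", "her", "ms", "mrs"}
MASCULINE_CUES = {"he", "him", "his", "mr"}


def detect_gender(context):
    """Steps 2-3: return 'f' or 'm' for a clear cue, or None if absent or conflicting."""
    words = set(re.findall(r"[a-z]+", context.lower()))
    has_f = bool(words & FEMININE_CUES)
    has_m = bool(words & MASCULINE_CUES)
    if has_f == has_m:
        return None
    return "f" if has_f else "m"


def translate(sentence, context=""):
    """Steps 1 and 4-6 for sentences of the form 'The <occupation> <predicate>'."""
    match = re.fullmatch(r"The (\w+) (.+)", sentence)
    if match is None:
        raise ValueError("unsupported sentence pattern")
    masculine, feminine = OCCUPATIONS[match.group(1).lower()]
    rest = PREDICATES[match.group(2)]
    gender = detect_gender(context)
    if gender == "m":
        return [f"{masculine.capitalize()} {rest}"]
    if gender == "f":
        return [f"{feminine.capitalize()} {rest}"]
    # No usable cue: offer both forms instead of defaulting to the masculine.
    return [f"{masculine.capitalize()} {rest}", f"{feminine.capitalize()} {rest}"]


print(translate("The engineer designed the bridge."))
# ['El ingeniero diseñó el puente.', 'La ingeniera diseñó el puente.']
print(translate("The engineer designed the bridge.", context="She presented the plans."))
# ['La ingeniera diseñó el puente.']
```

Returning both options when the source gives no cue leaves the choice to the reader instead of encoding a default; a real system would need far richer context analysis than keyword matching.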

Proposed Future Research Path

Future research should focus on developing a comprehensive framework for evaluating gender bias in machine translation systems. This framework should include metrics for quantifying the level of bias present, as well as methods for comparing the performance of different mitigation strategies. A diagram of the proposed research path would show a progression from data analysis and bias identification to the development of gender-neutral translation models, then testing and evaluation, and finally the integration of these models into real-world applications.

Conclusion

In conclusion, the way Google Translate handles gender-specific translations reveals a crucial area of concern in machine translation. The biases embedded in language data significantly influence the output of translation systems, potentially perpetuating harmful stereotypes. While Google Translate and other systems are striving to address these issues, ongoing research and development are needed to ensure fair and inclusive translations for all communities.

This exploration underscores the importance of critically evaluating AI tools and the crucial role of human intervention in addressing bias in automated translation.