Political ads on Facebook that use precise targeting to spread misinformation, and the polarization they fuel, are a significant issue. This deep dive explores how targeted ads on Facebook contribute to the spread of false information and deepen political polarization. We’ll examine the strategies used, the impact on public discourse, and potential countermeasures. From fabricated news to manipulated images, the tactics are diverse and sophisticated, which highlights the need for critical thinking and media literacy in the digital age.

The phenomenon of political misinformation is not new, but social media platforms like Facebook have amplified its reach and impact. This article examines the specific mechanisms through which Facebook’s ad platform lets those spreading misinformation target specific demographics and amplify its effects on public discourse. We will analyze the impact of these campaigns on public perception, trust in institutions, and political division.

Introduction to Political Misinformation

Political misinformation, a pervasive issue in the digital age, refers to false or misleading information about political matters. When it is spread deliberately, with the intention of influencing public opinion or manipulating political outcomes, it is more precisely called disinformation; this article uses “misinformation” for both. It can take many forms, from fabricated news stories to manipulated images and distorted statistics. The ease of sharing information online, coupled with a lack of critical thinking skills among some users, creates fertile ground for the propagation of such material. This deliberate manipulation often targets sensitive political issues and can have profound consequences, ranging from eroding public trust in institutions to inciting social division and violence.

Understanding the different forms of misinformation, the role of social media, and the characteristics of misinformation campaigns is crucial for combating its spread and safeguarding democratic processes.

Forms of Political Misinformation

Political misinformation manifests in various ways. Fabricated news stories, often resembling legitimate news articles, are one common tactic. These stories frequently exploit current events or controversies to spread false narratives. Manipulated images and videos, including AI-generated “deepfakes,” can create false or misleading representations of individuals or events. Misleading statistics, while appearing objective, can be deliberately skewed or misrepresented to support a particular viewpoint.

Role of Social Media Platforms in the Spread of Misinformation

Social media platforms, while facilitating communication and information sharing, have also become crucial vectors for the dissemination of political misinformation. Algorithms designed to promote engagement can inadvertently amplify misleading content, reaching vast audiences quickly. The anonymity and speed of online interactions often contribute to the rapid spread of misinformation without adequate fact-checking. The lack of moderation and accountability on some platforms exacerbates the problem.

Characteristics of Misinformation Campaigns

Understanding the characteristics of misinformation campaigns is vital for identifying and countering them. This analysis aids in detecting patterns and recognizing tactics commonly employed by purveyors of false information.

| Type of Misinformation Campaign | Characteristics | Example |
|---|---|---|
| Fabricated News | Creates entirely false news stories, often with fabricated sources and details. Mimics legitimate news format. | A fabricated story claiming a political candidate committed a crime, with fabricated quotes and witness testimonies. |
| Manipulated Images/Videos | Uses photo editing or video manipulation techniques to create false or misleading representations of people or events. | A deepfake video showing a political figure saying something they never actually said. |
| Misleading Statistics | Presents data in a way that misrepresents or distorts the truth. Often involves cherry-picking data points or ignoring context. | A misleading poll that excludes certain demographic groups, creating a skewed representation of public opinion. |

Facebook’s Role in Political Polarization

Facebook’s role in shaping public discourse, particularly on political issues, is substantial. The platform’s vast user base and sophisticated algorithms create an environment where political viewpoints can be amplified, sometimes exacerbating existing divisions and contributing to polarization. This influence extends beyond simply facilitating communication; Facebook’s design and operational features actively shape how users perceive and interact with political information. The platform’s design, while intended to connect people, can inadvertently reinforce existing beliefs and encourage the formation of echo chambers.

This is further compounded by the way political advertising is structured and targeted, which can lead to users encountering primarily content that confirms their existing biases. This phenomenon, coupled with the often-overlooked complexities of algorithmic decision-making, can create an environment conducive to political polarization.

Facebook’s Algorithmic Contributions to Polarization

Facebook’s algorithms, designed to personalize user experiences, can unintentionally contribute to political polarization. The algorithm prioritizes content based on user engagement, including interactions like likes, shares, and comments. This prioritization mechanism can inadvertently create filter bubbles, where users are primarily exposed to information that aligns with their pre-existing views. This lack of exposure to diverse perspectives can reinforce existing beliefs and make it harder for individuals to engage with differing viewpoints.


This phenomenon is often amplified by the platform’s emphasis on emotional reactions, such as anger or outrage, which can trigger further engagement and solidify entrenched positions.
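To make the filter-bubble mechanism concrete, here is a toy ranking sketch in Python. It is not Facebook’s actual ranking system: the scoring formula, the -1 to +1 “leaning” scale, and the “outrage” score are all hypothetical assumptions. The sketch assumes users engage more with posts close to their own views and with emotionally charged posts, then ranks a feed purely by that predicted engagement.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    leaning: float  # hypothetical scale: -1.0 (one side) to +1.0 (the other side)
    outrage: float  # hypothetical emotional-intensity score from 0.0 to 1.0


def predicted_engagement(post: Post, user_leaning: float) -> float:
    """Toy engagement model (an assumption, not Facebook's real formula)."""
    # 1.0 when the post matches the user's leaning, 0.0 when it is the opposite extreme.
    alignment = 1.0 - abs(post.leaning - user_leaning) / 2.0
    # Emotionally charged posts get up to a 2x boost in predicted engagement.
    return alignment * (1.0 + post.outrage)


def rank_feed(posts: list[Post], user_leaning: float, k: int) -> list[Post]:
    """Return the k posts with the highest predicted engagement for this user."""
    return sorted(posts, key=lambda p: predicted_engagement(p, user_leaning), reverse=True)[:k]


posts = [
    Post("A", leaning=-0.9, outrage=0.8),
    Post("B", leaning=-0.5, outrage=0.1),
    Post("C", leaning=0.0, outrage=0.2),
    Post("D", leaning=0.6, outrage=0.3),
    Post("E", leaning=0.9, outrage=0.9),
]

feed = rank_feed(posts, user_leaning=0.8, k=2)
print([p.title for p in feed])  # prints ['E', 'D']
```

For a user with a leaning of 0.8, both feed slots go to posts on the user’s own side, and the more emotionally charged one ranks first. Nothing in this objective rewards showing the opposing view, which is the dynamic the paragraphs above describe.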

Impact of Targeted Political Ads on User Engagement and Belief Formation

Targeted political advertising on Facebook can strongly affect user engagement and belief formation. The platform’s targeting options allow advertisers to reach specific demographics and interests, and even lists of specific individuals, with highly tailored messages. This precision can be instrumental in spreading misinformation or reinforcing existing biases, thereby shaping user opinions and potentially contributing to political polarization. The highly personalized nature of these ads can also contribute to the creation of echo chambers.

Users are more likely to encounter information confirming their pre-existing political leanings, which can result in a reinforcement of their current beliefs, without exposure to opposing viewpoints. This lack of exposure to a balanced range of perspectives can hinder the development of nuanced understandings of complex issues and, in turn, may escalate political polarization.

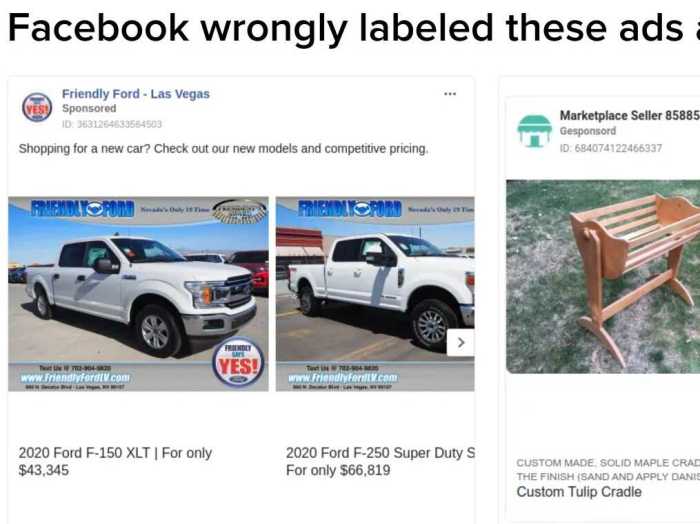

Examples of Facebook Ad Targeting Used to Spread Misinformation

Facebook’s ad targeting capabilities have repeatedly been exploited to spread misinformation and disinformation. These tactics frequently target specific groups or individuals, often using emotionally charged language or fabricated claims to gain traction. For instance, campaigns that promote false claims about election integrity have used Facebook’s ad targeting to reach specific demographics, such as voters who have previously expressed skepticism about the electoral system.

This form of targeted misinformation can manipulate users and contribute to distrust in democratic processes. Another example involves targeted advertising that attempts to influence public opinion on sensitive social issues. The use of emotional language and the spread of fabricated information can impact public perception and contribute to social division.

Key Features of Facebook’s Ad Targeting System and Misinformation Amplification

| Feature | How It Amplifies Misinformation |
|---|---|
| Demographic Targeting | Allows advertisers to focus on specific groups based on age, location, gender, or other attributes. This allows misinformation to be tailored to specific demographics, increasing the likelihood of reaching susceptible individuals and reinforcing their pre-existing biases. |
| Interest Targeting | Allows advertisers to target users based on their stated interests, hobbies, and behaviors. This enables the spread of misinformation to users who might be more receptive to certain claims or narratives due to pre-existing interests. |
| Behavioral Targeting | Targets users based on their past actions on Facebook, such as likes, shares, and page visits. This allows for the amplification of misinformation to users who have shown a tendency towards certain viewpoints or beliefs, further solidifying existing positions. |
| Custom Audiences | Allows advertisers to upload customer lists (such as email addresses or phone numbers), which Facebook matches to user accounts. This is a powerful tool that allows for the targeting of specific groups of individuals, potentially spreading misinformation to those who are most likely to be susceptible. |

Targeting Misinformation with Ads

Political actors are increasingly leveraging online platforms like Facebook to disseminate misinformation, strategically targeting specific demographics to influence public opinion. This targeted approach, often subtle and sophisticated, relies on understanding the vulnerabilities and predispositions of different groups. The effectiveness of these campaigns varies, but the potential for manipulating public discourse and undermining democratic processes is significant.

Strategies for Targeting Specific Demographics

Political actors employ various strategies to tailor misinformation campaigns to specific demographics. These include exploiting existing social and cultural divides, utilizing emotional appeals, and leveraging pre-existing biases. For instance, campaigns might target individuals concerned about economic hardship with misinformation regarding government policies, or exploit anxieties about immigration with misleading narratives about national security. They often exploit existing social media echo chambers, amplifying messages within groups already predisposed to certain viewpoints.

Facebook’s Ad Platform and Targeting Capabilities

Facebook’s ad platform provides powerful tools for targeting specific user groups. These include demographic information like age and location, as well as interest and behavior categories that Facebook infers from user activity and engagement. Political actors can create highly granular segments based on various criteria, enabling them to tailor messages to specific audiences with considerable precision. This allows for sophisticated microtargeting, reaching individuals who may be more susceptible to misinformation because of their particular values, beliefs, and concerns.

Examples of Misinformation Campaigns

| Campaign Type | Target Audience | Potential Impact on Public Discourse |
|---|---|---|
| Anti-vaccine movement | Parents, particularly those with children of school age, concerned about health and safety | Weakening public trust in scientific consensus, potentially leading to lower vaccination rates and increased risk of preventable diseases. |
| Pro-nationalism campaigns | Individuals with strong nationalistic sentiments, particularly in countries with heightened geopolitical tensions. | Promoting distrust in foreign entities, fueling xenophobia and nationalistic divisions, potentially hindering diplomatic efforts. |
| Economic hardship narratives | Individuals facing economic hardship or job insecurity | Distorting perceptions of government policies and economic realities, possibly inciting public unrest or skepticism of established institutions. |
| Disinformation about political opponents | Individuals supporting the opposing political party, or those wavering in their political affiliations. | Creating a climate of distrust and division, potentially influencing voter turnout or changing electoral outcomes. |

Effectiveness of Targeting Strategies

The effectiveness of these strategies in shaping public opinion is complex and multifaceted. While some campaigns successfully influence perceptions, others fail to gain traction due to exposure and verification efforts. The success of a campaign often hinges on factors such as the quality of the misinformation, the platform’s algorithms, and the susceptibility of the target audience. Studies on the impact of misinformation on public opinion are ongoing and show varying degrees of influence.

There’s evidence that targeted misinformation can indeed affect attitudes and behaviors, but the long-term impact and lasting effects remain to be fully understood.

Impact on Public Discourse

Political misinformation campaigns have a profound and often detrimental impact on public discourse, undermining trust in institutions and individuals, and fostering division. The spread of false or misleading information creates an environment where reasoned debate is replaced by emotional responses and entrenched positions. This erosion of trust and the subsequent polarization are significant challenges to a healthy democracy. Misinformation campaigns strategically exploit existing societal fault lines and anxieties.

By tailoring narratives to resonate with specific groups, they can amplify existing biases and prejudices, making it harder for individuals to critically evaluate information and fostering a climate of distrust. This can lead to an environment where truth is relative and often overshadowed by manufactured narratives.


Influence on Public Debate

Political misinformation often frames complex issues in simplistic, easily digestible terms, bypassing the nuances and complexities that characterize genuine debate. This simplification can lead to over-generalizations and the reinforcement of stereotypes. For example, an article portraying climate change as a hoax engineered by corrupt officials may encourage distrust in the scientific consensus rather than promoting a nuanced understanding of the issue.

Erosion of Trust in Institutions and Individuals

The proliferation of misinformation can damage the public’s trust in various institutions, from governments and news organizations to academic institutions and even medical professionals. When individuals are exposed to frequent and credible-seeming falsehoods, their confidence in established sources of information diminishes. This erodes the foundation upon which public discourse is built, leading to increased cynicism and a lack of faith in the system.

The spread of misinformation also erodes trust in individual experts and leaders. For instance, if a public figure is repeatedly shown to be disseminating inaccurate information, this can damage their credibility and make it more difficult for them to be seen as trustworthy.

Fostering Political Division and Conflict

Misinformation campaigns can be deliberately designed to sow discord and division among different groups. By emphasizing differences and highlighting perceived threats, they can create an environment ripe for conflict. The spread of false narratives about the intentions of opposing groups can further exacerbate tensions and create an us-versus-them mentality. This can lead to real-world consequences, such as increased social unrest and political instability.

For example, the dissemination of false information about election fraud can lead to protests and distrust in the democratic process.

Effect on Public Perception of Political Issues

Misinformation significantly impacts public perception of political issues. By presenting skewed or entirely fabricated narratives, campaigns can alter public opinion, sometimes in dramatic ways. This can result in the mischaracterization of policies and individuals, making it harder to have meaningful conversations about critical issues. This can create a distorted understanding of the problem, as well as the proposed solutions.

For example, a campaign might misrepresent a policy aimed at improving economic equality as one that will harm the middle class. This misrepresentation can lead to widespread opposition to the policy, regardless of its actual merits.

Methods of Counteracting Misinformation

Combating the spread of misinformation in political ads on Facebook requires a multifaceted approach. Simply removing ads isn’t enough; we need proactive measures to identify and counter false narratives before they gain traction. This involves leveraging technology, fostering media literacy, and empowering users to participate in a more informed online discourse. Understanding the different methods available allows for a more effective strategy to combat the spread of misinformation and promote a healthier political environment. Misinformation in political ads often exploits vulnerabilities in online platforms and algorithms.

Countermeasures need to address these vulnerabilities while simultaneously encouraging critical thinking and media literacy amongst users. The goal is not just to suppress misinformation, but to create a robust online environment that promotes accurate information and fosters constructive dialogue.

Detecting Misinformation in Political Ads

Identifying false or misleading information in political ads requires a combination of automated tools and human analysis. Sophisticated algorithms can scan ad copy, images, and associated links for inconsistencies, comparing them against known facts and databases of verified information. This automated analysis, while helpful, needs human oversight to evaluate context and nuances that might be missed by algorithms.

Furthermore, understanding the source of the misinformation and its intended audience is crucial to crafting effective countermeasures.
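The combination of automated screening and human review described above can be sketched as a simple claim-matching step. This is a hypothetical illustration, not any platform’s actual system: the list of previously debunked claims, the 0.8 similarity threshold, and the function names are assumptions, and Python’s standard-library `difflib.SequenceMatcher` stands in for the semantic matching models a production system would more likely use. Matches are queued for a human reviewer rather than removed automatically.

```python
import re
from difflib import SequenceMatcher

# Hypothetical database of claims that fact-checkers have already rated false.
KNOWN_FALSE_CLAIMS = [
    "voting day has been moved to wednesday",
    "you can vote by text message",
]


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't affect matching."""
    return " ".join(text.lower().split())


def flag_for_review(ad_text: str, threshold: float = 0.8) -> list[tuple[str, float]]:
    """Return (known claim, similarity) pairs for ad sentences that closely match a debunked claim.

    Flagged ads go to a human fact-checker, who judges context the string match cannot see.
    """
    hits = []
    # Compare sentence by sentence so a long ad doesn't dilute the similarity score.
    for sentence in re.split(r"[.!?]", ad_text):
        sentence = normalize(sentence)
        if not sentence:
            continue
        for claim in KNOWN_FALSE_CLAIMS:
            score = SequenceMatcher(None, sentence, claim).ratio()
            if score >= threshold:
                hits.append((claim, round(score, 2)))
    return hits


print(flag_for_review("Reminder: Voting day has been moved to Wednesday! Tell your friends."))
# prints [('voting day has been moved to wednesday', 0.88)]
print(flag_for_review("Vote for our candidate on Tuesday."))
# prints []
```

A check like this only catches close rewordings of claims already debunked. A true statistic presented without context would pass untouched, which is why the human review step matters.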

Role of Fact-Checking Organizations and Media Outlets

Fact-checking organizations and reputable media outlets play a critical role in combating misinformation. They provide independent verification of claims made in political ads, debunking false information and providing accurate context. This process builds public trust and empowers citizens to make informed decisions. Their work serves as a vital counterbalance to the often rapid spread of misinformation. Examples include the work of organizations like Snopes, PolitiFact, and FactCheck.org, which analyze claims made in political ads and provide independent assessments of their veracity.

Potential of User-Generated Content and Community Engagement

User-generated content and community engagement can be powerful tools in countering misinformation. Actively encouraging users to share accurate information and engage in constructive dialogue can help counteract the spread of false narratives. Platforms can promote user-generated fact-checks, create forums for discussing political issues, and reward users for sharing accurate information. This fosters a culture of critical thinking and informed discussion.

For example, Facebook groups dedicated to discussing local elections could be moderated to ensure accurate information is shared. This method encourages community engagement and promotes a more robust understanding of the political issues.

Table of Approaches to Combat Misinformation in Online Political Ads

| Approach | Description | Effectiveness |
|---|---|---|
| Automated Fact-Checking | Algorithms analyze ad content for inconsistencies with verified information. | High potential for speed and scale, but may miss nuanced or complex cases. |
| Human Fact-Checking | Independent fact-checkers verify claims and provide detailed analysis. | High accuracy, but slower and may not cover all instances. |
| User-Generated Content Promotion | Encourage users to share accurate information and engage in constructive dialogue. | Can build a strong community response, but requires effective moderation to prevent the spread of misinformation. |
| Media Literacy Initiatives | Educating users about identifying and evaluating sources of information. | Long-term solution that builds critical thinking skills, but requires sustained effort. |
| Platform Policies and Moderation | Implementing stricter policies against the spread of misinformation and actively moderating content. | Essential for controlling the spread of harmful misinformation, but may be challenged by the scale and dynamism of the issue. |

Case Studies of Misinformation Campaigns

Dissecting the impact of misinformation campaigns on Facebook requires examining real-world examples. These campaigns, often meticulously crafted and targeted, can have a profound effect on public opinion, potentially influencing voting behavior and societal divisions. Understanding the tactics employed and the consequences faced by specific communities provides crucial insight into the mechanisms driving polarization and the necessity for effective countermeasures.


Examples of Misinformation Campaigns on Facebook

Numerous campaigns have leveraged Facebook’s platform to spread false or misleading information. These efforts often exploit existing social divisions, using emotionally charged language and targeted messaging to resonate with specific demographics. Analyzing these campaigns reveals the various strategies employed and the consequences of their actions.

Impact on Specific Demographics and Communities

Misinformation campaigns frequently target vulnerable populations or those with pre-existing biases. This targeted approach often plays on anxieties or fears, making individuals more susceptible to false narratives. The impact can be seen in heightened distrust of institutions, increased social division, and, in some cases, a significant shift in public opinion within specific communities. For instance, false claims about election fraud can instill a sense of insecurity and distrust in the democratic process, especially within communities already experiencing political polarization.

Influence on Public Opinion and Voting Behavior

The influence of these campaigns on public opinion and voting behavior is a complex issue. While definitive causality is challenging to establish, research has found associations between exposure to misinformation and shifts in public opinion. Effects on voting behavior are harder to demonstrate: individuals may be swayed by false information when choosing how to vote, but measured effects vary widely across studies.

Even modest shifts can matter in close elections and can deepen societal polarization.

Characteristics and Outcomes of Misinformation Campaigns

| Campaign | Key Characteristics | Target Demographics | Outcomes |
|---|---|---|---|
| “Russia 2016 Interference” | Pro-Kremlin accounts, coordinated inauthentic behavior, impersonation, and the spread of false information. | U.S. citizens, particularly social media users | Erosion of trust in news media, increased political division, and attempted influence on voting patterns (the measurable effect on votes remains disputed). |
| “COVID-19 Vaccine Hoaxes” | Dissemination of false information about vaccine safety and efficacy, often exploiting anti-establishment sentiments. | Individuals hesitant about vaccination, especially those in conservative communities. | Reduced vaccination rates, spread of fear and distrust towards medical experts, and potential increase in health risks. |
| “Climate Change Denial” | Promoting misinformation about the severity and causes of climate change, often employing skepticism and doubt-mongering tactics. | People skeptical of climate change science and policies. | Delayed climate action, decreased public support for climate policies and environmental initiatives, and further polarization of environmental issues. |

Illustrative Examples of Misinformation

Misinformation, often intentionally designed to mislead, poses a significant threat to informed public discourse and can have far-reaching consequences. Understanding the tactics employed in spreading misinformation is crucial to combating its influence. This section will provide specific examples of misinformation campaigns targeting particular social groups, highlighting how these campaigns were executed and their impact. The spread of misinformation often relies on exploiting existing societal divisions and anxieties.

This can manifest in various forms, from subtle manipulation of language to the creation of fabricated narratives. Understanding these strategies is vital for recognizing and countering the influence of such campaigns.

Specific Instances Targeting Political Groups

Misinformation campaigns often exploit existing political divisions, targeting specific groups with tailored narratives. These narratives can range from outright fabrications to the selective presentation of facts to support a desired conclusion. The following examples demonstrate the nature of these targeted campaigns.

- Campaign Targeting Environmental Concerns: A fabricated narrative suggesting that renewable energy sources are harmful to public health and that fossil fuels are environmentally safe. This misinformation was disseminated on Facebook, Instagram, and YouTube through targeted advertisements and posts by accounts posing as concerned citizens. The targeted groups included people who held skeptical views on environmental issues and those with limited knowledge of the scientific consensus on climate change. Strategies included creating fake profiles, using emotionally charged language, and “astroturfing,” which mimics grassroots public support. By creating doubt about scientific data, the campaign eroded public trust in the scientific consensus on climate change and fostered distrust of environmental organizations and movements.

- Campaign Targeting Immigrant Communities: The spread of false claims about immigrants taking jobs away from native-born citizens was a common tactic. These narratives were often presented as news articles, shared on Facebook, and reposted on Twitter and smaller, niche websites. The targeted groups included those with existing anxieties about immigration and economic issues. Strategies included provocative headlines, inflammatory rhetoric, and false statistics. This misinformation increased fear and prejudice toward immigrants among the targeted groups.

Characteristics of Platforms Used for Dissemination

The platforms used to disseminate misinformation play a significant role in its impact. Understanding their features and vulnerabilities is key to effective countermeasures.

- Social Media Platforms: Social media platforms have features that facilitate rapid dissemination of content. Ranking algorithms optimized for engagement rather than factual accuracy can contribute to the spread of misinformation. Gaps in fact-checking coverage allow false information to persist and gain traction. Platforms like Facebook, Twitter, and Instagram are particularly vulnerable because of their user-generated content model and their large user bases.

- News Aggregators: News aggregators often curate content from various sources, including those known for spreading misinformation. The aggregation process can obscure the original source of the misinformation, making it harder for users to determine the reliability of the information. This process can unintentionally amplify the reach of false information.

Strategies Employed to Spread Misinformation

Misinformation campaigns often use specific strategies to target audiences and manipulate public perception. These strategies often include the use of psychological manipulation techniques and sophisticated social engineering methods.

- Emotional Appeals: Misinformation frequently leverages strong emotions such as fear, anger, or excitement to gain traction. The use of emotionally charged language, images, and narratives can evoke a strong response from the audience, increasing the likelihood of sharing the information.

- Creating a Sense of Urgency: Creating a sense of urgency can increase the likelihood of people sharing misinformation. The idea that information is “breaking” or “time-sensitive” encourages rapid dissemination before verification is possible.

Conclusion

In conclusion, the use of targeted Facebook political ads to spread misinformation, and the polarization it fuels, poses a serious threat to informed public discourse. The analysis of misinformation strategies, targeting techniques, and real-world case studies shows the extent of the problem. While no single solution exists, a collaborative effort by fact-checkers, media outlets, and users is crucial to mitigating the spread of misinformation. This underscores the urgent need for stronger regulations and enhanced media literacy programs to combat this growing threat.