Apple’s visual intelligence on the iPhone 16, its AI-driven camera control, and Google Lens mark a convergence of on-device AI and mobile photography. Apple’s visual intelligence, integrated into the iPhone 16’s camera system, uses AI to enhance image processing and control. This article compares it with Google Lens and explores how each platform approaches visual intelligence. We’ll look at the iPhone 16’s camera interface, its AI-powered features, and how they compare with Google Lens’ capabilities.

This exploration examines the iPhone 16’s camera system in detail, analyzing its visual intelligence features, AI-driven camera controls, and contrasting them with Google Lens. Key aspects like the user interface, image processing pipeline, and potential future directions are thoroughly investigated. The analysis will highlight the strengths and weaknesses of each approach and their impact on the user experience.

Apple Visual Intelligence and Camera Control

Apple’s relentless pursuit of seamless and intuitive user experiences has culminated in sophisticated visual intelligence features integrated directly into the iPhone’s camera system. This technology allows for enhanced image capture and processing, enabling features like advanced object recognition, scene understanding, and automated adjustments. This evolution is particularly evident in the iPhone 16, marking a significant step forward in the field of mobile photography. The core principle behind Apple’s approach is to provide a user-friendly interface while leveraging powerful machine learning algorithms.

This results in intuitive features that are not only effective but also effortless to use. The company’s commitment to closed-system integration allows for fine-tuned optimization and a consistent user experience across various models.

Evolution of Camera Control Across iPhone Models

The iPhone’s camera has evolved from a simple point-and-shoot tool to a sophisticated platform capable of processing and interpreting visual information. Early models relied on basic image capture, while later models incorporated features like autofocus and image stabilization. The iPhone 16 builds upon this legacy by incorporating advanced visual intelligence algorithms. The iterative improvements reflect Apple’s commitment to enhancing the user experience and pushing the boundaries of mobile photography.

Apple’s Visual Intelligence Features in iPhone 16

Apple’s visual intelligence in the iPhone 16 incorporates a suite of features designed to augment the user experience during image capture. These include intelligent scene detection, automatic subject tracking, and advanced image optimization. The key to the success of these features lies in the integration of sophisticated machine learning algorithms.

| Feature | Description | Example Use Case | Technical Details |
|---|---|---|---|
| Intelligent Scene Detection | The camera automatically identifies the scene being captured (e.g., portrait, landscape, food) and optimizes settings accordingly. | Taking a photo of a person in a park, the camera automatically adjusts for portrait mode. | Utilizes image analysis to classify scene elements and adjust parameters such as depth of field and exposure. |
| Automatic Subject Tracking | The camera continuously tracks and focuses on a moving subject, ensuring sharp images even in dynamic scenarios. | Recording a child playing in a park, the camera maintains focus on the child. | Utilizes object detection algorithms to predict the subject’s position and maintain focus. |
| Advanced Image Optimization | The camera uses visual intelligence to enhance image quality, including features like noise reduction and dynamic range adjustment. | Taking photos in low-light conditions, the camera enhances detail and reduces noise. | Leverages deep learning models to analyze images and make adjustments to enhance visual quality. |

Comparison with Google Lens

While both Apple and Google leverage visual intelligence in their mobile products, their approaches differ significantly. Apple focuses on seamless integration within the core camera app, emphasizing a unified user experience. Google, on the other hand, often presents its visual intelligence features as independent tools, like Google Lens, offering a broader range of functionalities.

Impact on User Experience

The integration of visual intelligence significantly improves the user experience by automating complex tasks, enabling easier image capture, and providing enhanced results. Users experience a noticeable difference in the quality and ease of use, especially in challenging shooting scenarios.

Future Directions

Future iterations of Apple’s visual intelligence camera technology could potentially include enhanced augmented reality features, more sophisticated scene understanding, and integration with other Apple services. This could lead to an even more intuitive and powerful mobile photography experience.

AI Camera Control in iPhones

The iPhone 16’s camera system represents a significant leap forward in mobile photography, driven largely by advancements in artificial intelligence. This technology empowers users with sophisticated features, enhancing both image capture and post-processing capabilities. The intelligent algorithms behind the scene are meticulously crafted to deliver exceptional image quality and intuitive control, pushing the boundaries of what’s possible in smartphone photography. AI-powered features in the iPhone 16’s camera significantly enhance the user experience, offering automatic adjustments and creative control that were previously only available with dedicated DSLR cameras.

This sophisticated automation is achieved through a suite of AI algorithms that analyze the scene, predict optimal settings, and make adjustments in real-time, all within the confines of the compact phone design.

AI Algorithms for Image Processing and Object Recognition

Sophisticated AI algorithms are at the heart of the iPhone 16’s camera system, enabling impressive feats of image processing and object recognition. These algorithms are designed to analyze incoming images in real time, identifying features, objects, and even scenes. Algorithms commonly used for these tasks include convolutional neural networks (CNNs) for image classification, object detection, and image enhancement, along with recurrent neural networks (RNNs) for scene understanding and predictive modeling.

These algorithms enable the camera to make rapid adjustments to exposure, focus, and other parameters to optimize the image quality in dynamic scenarios.
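As a rough illustration of what an automatic exposure adjustment involves, the sketch below meters a frame by its average luminance and computes how many stops of compensation would bring it to a mid-gray target. This is a classical metering heuristic, not Apple’s method; the function names, the 0.18 target, and the ±2 EV clamp are assumptions chosen for the example.

```python
import math

def mean_luminance(pixels):
    """Average Rec. 709 luma of (r, g, b) pixels with channels in 0..1."""
    total = 0.0
    for r, g, b in pixels:
        total += 0.2126 * r + 0.7152 * g + 0.0722 * b
    return total / len(pixels)

def exposure_compensation_ev(pixels, target=0.18, max_ev=2.0):
    """Stops of compensation that move the mean luminance to `target`.

    Positive values brighten, negative values darken. The result is
    clamped to +/- max_ev (an assumed limit for this sketch).
    """
    lum = max(mean_luminance(pixels), 1e-6)  # avoid log of zero on black frames
    ev = math.log2(target / lum)
    return max(-max_ev, min(max_ev, ev))
```

A frame that meters at half the target luminance yields roughly +1 EV, meaning the exposure would need to double.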

AI-Powered Camera Modes

The iPhone 16’s camera offers a range of AI-powered modes, each designed to enhance image capture in different situations. These modes leverage AI algorithms to automate various tasks, freeing users from the complexities of manual adjustments.

| Mode | Functionality | Example Output |
|---|---|---|
| Portrait Mode | Automatically detects and isolates the subject from the background, creating a shallow depth of field effect. | A well-defined subject with a blurred background, highlighting the subject. |
| Night Mode | Optimizes image quality in low-light conditions, reducing noise and enhancing detail. | Clear images in dimly lit environments with minimal graininess. |
| Action Mode | Improves image stabilization and reduces blur when capturing fast-moving subjects. | Sharp images of fast-moving objects, like athletes or vehicles, with minimal motion blur. |
| Photographic Styles | Provides various artistic filters that enhance the overall visual appeal of the image through image processing. | Images with distinct artistic effects, including vintage, vibrant, or black and white tones. |

Role of AI in Camera Control

AI plays a crucial role in various aspects of camera control, including autofocus, image stabilization, and scene detection. AI-driven autofocus systems quickly and precisely focus on subjects, adapting to varying lighting conditions and subject movement. Image stabilization algorithms compensate for camera shake, maintaining sharp images even during handheld shots, especially in low-light environments. Scene detection algorithms identify the scene and adjust settings automatically to achieve optimal image quality, recognizing elements like landscapes, portraits, or night scenes.
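To make the idea of focus adapting to subject movement concrete, here is a minimal sketch of a constant-velocity predictor: it extrapolates the subject’s next position from its last two detected positions and places a focus window there. Real trackers are far more elaborate, and nothing here reflects Apple’s implementation; `predict_next_center` and `focus_window` are hypothetical helper names.

```python
def predict_next_center(prev, curr):
    """Linear extrapolation: assume the subject keeps its last per-frame velocity."""
    vx = curr[0] - prev[0]
    vy = curr[1] - prev[1]
    return (curr[0] + vx, curr[1] + vy)

def focus_window(center, size, frame_w, frame_h):
    """Square focus window of side `size` around `center`, clamped to the frame.

    Returns (x0, y0, x1, y1) in pixel coordinates.
    """
    half = size // 2
    x0 = min(max(center[0] - half, 0), frame_w - size)
    y0 = min(max(center[1] - half, 0), frame_h - size)
    return (x0, y0, x0 + size, y0 + size)

# Usage: subject moved from (100, 100) to (110, 105) between two frames.
nxt = predict_next_center((100, 100), (110, 105))
window = focus_window(nxt, 40, 640, 480)
```

Predicting one frame ahead lets the focus window lead the subject instead of lagging behind the most recent detection.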

Potential Limitations of AI Camera Control

While AI-powered camera control offers significant advantages, there are potential limitations. The accuracy of AI algorithms can be influenced by factors like lighting conditions, object complexity, and scene variability. In complex or unusual situations, the AI’s performance might not always match human expertise. Furthermore, the reliance on AI can sometimes lead to a lack of control over specific parameters, potentially limiting creative expression for some users.

Google Lens and its Comparison with Apple’s Approach

Google Lens, Google’s visual search technology, offers a powerful alternative to Apple’s visual intelligence features, particularly in the iPhone 16. While Apple focuses on integrated camera functionality, Google Lens provides a broader range of visual recognition and search capabilities. This comparison highlights the strengths and weaknesses of each approach, considering their unique value propositions. Google Lens, a part of Google’s broader suite of services, leverages machine learning to understand and interpret images and videos.

It allows users to interact with the world around them by recognizing objects, text, and other visual information. This is a key differentiator from Apple’s approach, which is more tightly integrated into the core iPhone experience.

Google Lens Features and Functionalities

Google Lens excels in recognizing and extracting information from diverse visual sources. Its core functionalities include object recognition, text translation, and landmark identification. It can identify plants, animals, and products, providing detailed information. Google Lens can also translate text in real time through the camera, which is particularly useful for tourists or anyone needing quick translations.

It also offers functionalities for shopping, navigation, and more. A user can point their phone camera at a product and find it on online retailers or discover details about a landmark.

Comparison of Visual Intelligence

Both Apple and Google employ visual intelligence, but their approaches differ significantly. Apple’s focus is on seamless integration within the iPhone ecosystem, enhancing features like image editing, augmented reality experiences, and image recognition for identification. Google Lens, on the other hand, emphasizes a broader, independent platform for visual information extraction and search, operating across various applications and platforms.

Strengths and Weaknesses

| Feature | Apple iPhone 16 | Google Lens |
|---|---|---|
| Object Recognition | Strong, integrated into camera and other apps; potentially more focused on real-time uses within the ecosystem. | Very broad; identifies a wide range of objects, including less common items; functionality across multiple platforms. |
| Text Recognition | Good, particularly in supported apps, with potential for improved accuracy and speed in future releases. | Highly accurate and efficient, useful for translating text in real time or extracting text from images. |
| Accessibility | Limited to iPhone users, integration within ecosystem. | Accessible via Google Lens app on various devices, with more accessibility options. |
| Ecosystem Integration | Strong, tightly integrated into core iPhone features. | Operates independently, providing a more comprehensive but potentially less integrated solution. |

Unique Value Propositions

Apple’s value proposition lies in the seamless integration of visual intelligence into its core product. This results in a highly polished user experience. Google’s value proposition is its broad range of functionalities and its accessibility across multiple platforms.

Potential for Integration or Collaboration

The potential for integration or collaboration between Apple and Google in this space is noteworthy. Imagine an Apple device that can leverage Google Lens’s broader recognition capabilities while maintaining the user experience and ecosystem integration Apple is known for. A shared data model or API could allow both companies to leverage each other’s strengths.

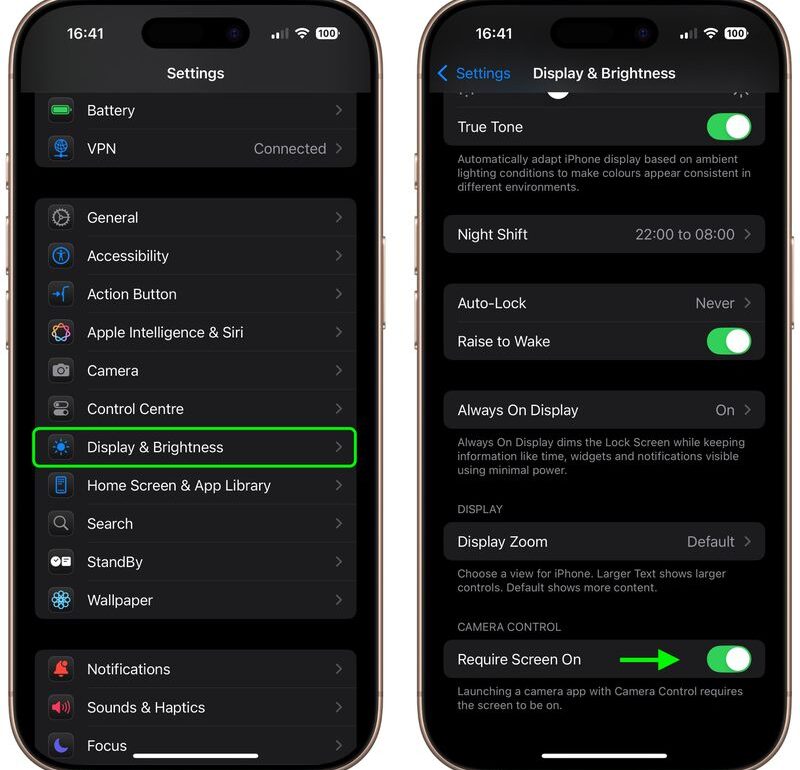

iPhone 16 Camera Control Interface

The iPhone 16 camera app, built upon Apple’s Visual Intelligence and leveraging advancements in AI, offers a refined and intuitive user interface for controlling camera functions. This interface prioritizes ease of use, enabling both novice and expert photographers to capture high-quality images and videos efficiently. The seamless integration of AI-powered features with the intuitive design further enhances the user experience.

Camera Interface Design Principles

The iPhone 16 camera interface prioritizes a clean and uncluttered design, minimizing visual distractions. Large, easily-tappable icons and strategically placed controls guide users through various options, while maintaining a visually appealing aesthetic. This design prioritizes clarity and efficiency, enabling users to quickly access essential controls and settings without unnecessary complexity. The intuitive layout allows for swift navigation and effortless adjustments.

Key Camera Controls and Settings

The camera app provides a comprehensive set of controls, categorized for user convenience. This allows users to personalize their shooting experience and fine-tune the camera’s performance to their specific needs.

| Control | Function | Example |
|---|---|---|
| Photo/Video Toggle | Switch between photo and video recording modes. | Tap the icon to switch from taking pictures to recording video. |
| Focus Point Adjustment | Precisely select the focal point for the image or video. | Drag the focus point to the desired area in the viewfinder. |
| Exposure Compensation | Adjust the brightness of the captured image or video. | Use sliders to increase or decrease the exposure value. |
| Timer | Enable a timer for self-portraits or group shots. | Set a timer for 3 or 10 seconds. |
| Filters/Effects | Apply various filters and creative effects to enhance the image or video. | Select from a variety of filters like “Monochrome” or “Vintage.” |
| Panorama Mode | Capture panoramic images by taking multiple overlapping shots. | Pan the device across the scene to create a panoramic image. |

Accessibility Features

The iPhone 16 camera app prioritizes accessibility, incorporating features designed to enhance usability for users with disabilities. These features include adjustable font sizes, customizable color palettes, and alternative input methods. Furthermore, VoiceOver narration provides audio feedback for image content, allowing users to identify objects or elements within the scene. Customizable interface elements and controls ensure inclusivity and ease of use.

Design Considerations

The iPhone 16 camera interface design takes into account user ergonomics and optimal control flow. The arrangement of controls follows a logical sequence, minimizing the need for complex navigation or repeated steps. The design prioritizes clarity and efficiency, allowing users to focus on capturing the desired image or video. The seamless integration of AI-powered features into the user interface enhances the overall user experience and usability.

Image and Video Processing in iPhone 16 Camera

The iPhone 16 camera boasts significant advancements in image and video processing, pushing the boundaries of mobile photography. This enhanced processing pipeline not only improves image quality but also enables real-time features and advanced capabilities. This exploration delves into the intricacies of this pipeline, highlighting its stages and the trade-offs involved. The iPhone 16 camera’s image and video processing pipeline is a complex interplay of hardware and software components.

It begins with the capture of light by the sensor, then undergoes a series of digital transformations to produce the final image or video. These transformations include crucial steps like noise reduction, object recognition, and color correction, all aimed at delivering the best possible photographic experience.

Image Capture

The initial stage involves capturing light using the camera’s sensor. High-resolution sensors, coupled with advanced pixel technology, enable the acquisition of detailed data about the scene. This raw data, rich in information about light intensity and color, forms the foundation for subsequent processing steps. Precise timing and synchronization are essential to accurately record the scene.

Noise Reduction

Raw sensor data often contains noise, stemming from various sources. Sophisticated algorithms are applied to reduce this noise while preserving important details in the image. These algorithms consider spatial and temporal information to effectively identify and mitigate noise without causing undesirable artifacts.
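The spatial and temporal ideas mentioned above can be sketched with two textbook filters: averaging several aligned frames (temporal) and a 3x3 median filter within one frame (spatial). These are generic techniques shown for illustration, not the algorithms the iPhone actually uses; images are assumed to be grayscale and stored as nested lists.

```python
from statistics import median

def temporal_average(frames):
    """Average aligned grayscale frames pixel by pixel (temporal denoising)."""
    h, w = len(frames[0]), len(frames[0][0])
    n = len(frames)
    return [[sum(f[y][x] for f in frames) / n for x in range(w)]
            for y in range(h)]

def median_filter_3x3(img):
    """Replace each pixel with the median of its 3x3 neighbourhood.

    Border pixels use only the neighbours that fall inside the image.
    """
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(median(window))
        out.append(row)
    return out
```

Temporal averaging suppresses random noise when the scene is static, while the median filter removes isolated outlier pixels without smearing edges as much as a plain average would.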

Object Recognition

The iPhone 16 camera’s processing pipeline incorporates machine learning models to identify objects within the scene. This capability enables features like automatic subject detection and focusing, allowing for improved image composition and control. Real-time object recognition enables features like portrait mode, which selectively blurs the background to emphasize the subject.
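A simplified view of the portrait effect: once a model has produced a subject mask, the background can be blurred and the original subject pixels composited back on top. The sketch below assumes the mask is already available as a grid of booleans and uses a plain box blur; both are stand-ins for whatever segmentation and blur the real pipeline uses.

```python
def box_blur(img, radius=1):
    """Mean of each pixel's (2*radius+1)^2 neighbourhood, clipped at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - radius), min(h, y + radius + 1))
                    for i in range(max(0, x - radius), min(w, x + radius + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out

def portrait_composite(img, subject_mask, radius=1):
    """Keep pixels where subject_mask is True, use blurred pixels elsewhere."""
    blurred = box_blur(img, radius)
    return [[img[y][x] if subject_mask[y][x] else blurred[y][x]
             for x in range(len(img[0]))]
            for y in range(len(img))]
```

The quality of the result depends almost entirely on the mask: errors at the subject boundary show up as halos or blurred hair, which is where learned segmentation makes the difference.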

Color Correction and Enhancement

The pipeline includes color correction and enhancement algorithms. These algorithms adjust the color balance and saturation to match the scene’s characteristics. They consider ambient lighting conditions and apply appropriate adjustments to ensure accurate and vivid colors. The pipeline can also adjust colors based on user preferences and image style.
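One classical way to adjust color balance for ambient lighting is the gray-world assumption: the average color of a scene should be neutral gray, so each channel is scaled until the channel averages match. The sketch below shows that heuristic only as an example of the technique; it is not claimed to be what the iPhone does, and the function names are made up for the illustration.

```python
def gray_world_gains(pixels):
    """Per-channel gains that equalize the average R, G and B values.

    `pixels` is a list of (r, g, b) tuples with channels in 0..1.
    A channel whose average is zero keeps a gain of 1.0.
    """
    n = len(pixels)
    avgs = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(avgs) / 3
    return tuple(gray / a if a > 0 else 1.0 for a in avgs)

def apply_gains(pixels, gains):
    """Scale each channel by its gain, clipping results to 1.0."""
    return [tuple(min(1.0, c * g) for c, g in zip(p, gains)) for p in pixels]

# Usage: a frame with a warm (orange) cast is pulled back to neutral.
warm = [(0.6, 0.4, 0.2), (0.6, 0.4, 0.2)]
balanced = apply_gains(warm, gray_world_gains(warm))
```

Gray-world fails on scenes dominated by one color, such as a close-up of grass, which is one reason modern pipelines estimate the illuminant with learned models instead.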

Video Processing

Video processing in the iPhone 16 camera is more complex than still image processing. Real-time video stabilization, for instance, demands high computational power and sophisticated algorithms to compensate for camera movement. The pipeline handles tasks such as frame rate conversion, compression, and various encoding techniques to achieve high-quality video playback.
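The core idea of electronic stabilization can be sketched in one dimension: estimate the camera’s position per frame, smooth that trajectory, and shift each frame by the difference between the smoothed and the raw path. The example below uses a moving average as the smoother and assumes the per-frame positions are already known; how they are estimated on the device (for instance from motion sensors) is outside this sketch.

```python
def moving_average(values, radius):
    """Centered moving average; windows are truncated at both ends."""
    out = []
    for i in range(len(values)):
        lo = max(0, i - radius)
        hi = min(len(values), i + radius + 1)
        window = values[lo:hi]
        out.append(sum(window) / len(window))
    return out

def stabilizing_offsets(trajectory, radius=1):
    """Per-frame shift that moves each frame from the raw path onto the smooth one."""
    smooth = moving_average(trajectory, radius)
    return [s - t for s, t in zip(smooth, trajectory)]

# Usage: a shaky horizontal path, in pixels, over five frames.
offsets = stabilizing_offsets([0, 3, 0, 3, 0], radius=1)
```

A larger radius gives smoother footage but requires cropping further into the frame, and a centered window needs future frames, which adds latency in a live preview.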

Trade-offs

The image and video processing pipeline in the iPhone 16 camera must balance processing speed with image quality. Faster processing allows for real-time features like augmented reality effects, while higher quality images require more complex algorithms and computational resources. A balance between these two factors is essential for delivering a user-friendly and high-quality camera experience.
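The speed-versus-quality balance can be framed as a per-frame time budget: at a given frame rate, only processing that finishes within one frame interval can run in real time. The sketch below picks the highest-quality preset that fits that budget. The preset names, costs, and quality scores in the usage lines are entirely hypothetical and serve only to show the selection logic.

```python
def frame_budget_ms(fps):
    """Time available to process one frame at the given frame rate."""
    return 1000.0 / fps

def pick_preset(presets, fps):
    """Return the name of the highest-quality preset whose cost fits the budget.

    `presets` is a list of (name, cost_ms, quality) tuples.
    Returns None when no preset is fast enough.
    """
    budget = frame_budget_ms(fps)
    fitting = [p for p in presets if p[1] <= budget]
    if not fitting:
        return None
    return max(fitting, key=lambda p: p[2])[0]

# Hypothetical presets: (name, cost in ms per frame, relative quality).
PRESETS = [("fast", 8, 1), ("balanced", 20, 2), ("max", 60, 3)]
choice_30fps = pick_preset(PRESETS, 30)
choice_60fps = pick_preset(PRESETS, 60)
```

At 30 fps the budget is about 33 ms per frame, so heavier processing fits than at 60 fps, where only about 17 ms is available.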

Examples of High-Quality Images and Videos

The iPhone 16 camera excels in capturing high-quality images and videos in diverse lighting conditions. Sharpness and detail are notable in well-lit scenes, while low-light performance is impressive. The iPhone 16’s ability to maintain image quality during fast-paced video capture, such as sports or action sequences, is noteworthy. Example videos demonstrate smooth, stable video recording, even in dynamic settings.

Image Processing Pipeline Diagram

[A diagram illustrating the image processing pipeline would be presented here. It would visually depict the stages, from image capture to final output, and the flow of data between them. This would include elements like the sensor, signal processing units, and the various processing modules (noise reduction, object recognition, color correction). The diagram would also highlight the connections to the display and the user interface.]

Conclusive Thoughts

In conclusion, Apple’s iPhone 16 camera, powered by visual intelligence and AI, offers a compelling user experience. Its advanced features, contrasted with Google Lens, showcase a fascinating technological landscape. While Google Lens provides broad visual recognition, Apple’s integrated approach, emphasizing user-friendliness and seamless integration with the iPhone ecosystem, is a notable strength. Future directions for these technologies, and potential collaborations, remain intriguing possibilities.

This comprehensive analysis provides a valuable insight into the cutting-edge capabilities and future potential of mobile camera technology.